Volume 15, Issue 6 And S7 (Artificial Intelligence 2025)

J Research Health 2025, 15(6 And S7): 705-722

Bagheri Sheykhangafshe F, Tajbakhsh K, Pour Saeid V, Gharibi Loroun S, Shah Hosseini M. Effectiveness of Artificial Intelligence Interventions in the Treatment of Psychological Disorders: A Systematic Review. J Research Health 2025;15(6):705-722

URL: http://jrh.gmu.ac.ir/article-1-2850-en.html

Farzin Bagheri Sheykhangafshe1, Khazar Tajbakhsh2, Vahid Pour Saeid3, Shima Gharibi Loroun4, Mahtab Shah Hosseini5

1- Department of Psychology, Faculty of Humanities, Tarbiat Modares University, Tehran, Iran. farzinbagheri73@gmail.com

2- Department of Psychology, Faculty of Psychology, University of Milano-Bicocca, Milan, Italy.

3- Department of Clinical Psychology, TMS.C., Islamic Azad University, Tabriz, Iran.

4- Department of Clinical Psychology, ARD.C., Islamic Azad University, Ardabil, Iran.

5- Department of Clinical Psychology, GAR.C., Islamic Azad University, Garmsar, Iran.

Keywords: Artificial intelligence (AI), Digital mental health, Psychological disorders, Machine learning, Mental health technology

Introduction

The prevalence of psychological disorders continues to rise globally, imposing substantial burdens on individuals, families, and healthcare systems [1]. Mental health disorders, such as major depressive disorder, generalized anxiety disorder, bipolar disorder, and schizophrenia, not only diminish quality of life but also contribute to increased morbidity, disability, and mortality worldwide [2]. Despite advances in psychopharmacology and psychotherapy, traditional mental health care delivery remains challenged by delays in diagnosis, heterogeneity of clinical presentations, limited access to specialized care, and variability in treatment response [3]. These challenges necessitate innovative approaches to enhance early detection, optimize personalized treatment, and improve overall clinical outcomes [4]. Against this backdrop, artificial intelligence (AI) has emerged as a transformative technology with the potential to fundamentally reshape mental health diagnosis, intervention, and management [5].

AI refers to the development of computer systems capable of performing tasks that typically require human intelligence, including learning, reasoning, problem-solving, and pattern recognition [6]. In mental health care, AI encompasses various subfields, such as machine learning (ML), natural language processing (NLP), computer vision, and deep neural networks [7]. These technologies enable the extraction and analysis of complex and high-dimensional data from diverse sources, including electronic health records (EHRs), neuroimaging scans, genetic profiles, speech and behavioral patterns, and digital phenotyping via smartphones and wearable devices [8]. By leveraging this data, AI systems can identify subtle clinical markers, predict disease trajectories, and tailor interventions with unprecedented precision, thereby offering an evidence-based complement to traditional clinician judgment [9].

The increasing global burden of mental disorders is compounded by a significant shortage of qualified mental health professionals, particularly in low- and middle-income countries, and in underserved rural areas [10]. This treatment gap leads to underdiagnosis, delayed interventions, and poor prognosis for many patients [11]. Digital mental health technologies powered by AI offer scalable and cost-effective solutions capable of extending the reach of care beyond traditional clinical settings [12]. For instance, AI-driven mobile applications and conversational agents (chatbots) provide continuous symptom monitoring, psychoeducation, cognitive behavioral therapy (CBT), and crisis support accessible anytime and anywhere [13]. These modalities not only improve patient engagement and adherence but also reduce the stigma and logistical barriers often associated with face-to-face therapy [14].

Despite the significant potential of AI to revolutionize psychological care, its integration raises critical ethical, legal, and practical concerns [15]. The use of sensitive mental health data necessitates robust privacy protections and transparent data governance frameworks to maintain patient confidentiality and trust [16]. Algorithmic bias and lack of representativeness in training datasets can perpetuate health disparities and reduce the validity of AI applications across diverse demographic and cultural populations [17]. Additionally, overreliance on AI could inadvertently diminish the therapeutic alliance, a core factor in successful psychological treatment, by sidelining human empathy and nuanced clinical decision-making [18]. Therefore, ethical frameworks, rigorous validation studies, and clinician training programs are essential to safeguard patient welfare and maximize the benefits of AI-assisted interventions [19].

In recent years, a burgeoning number of empirical studies have investigated the efficacy and utility of AI-based interventions for psychological disorders [20, 21]. Research has demonstrated AI’s capability to improve diagnostic accuracy through automated pattern recognition in neuroimaging and speech analysis, facilitate early identification of prodromal symptoms, and enhance treatment personalization through adaptive algorithms that adjust therapeutic content based on real-time patient feedback [22]. Randomized controlled trials (RCTs) and observational studies have also reported positive outcomes in symptom reduction and functional improvement using AI-facilitated digital therapeutics [23]. However, heterogeneity in study methodologies, sample populations, intervention modalities, and outcome measures poses challenges for drawing definitive conclusions, underscoring the need for systematic synthesis and critical appraisal [24, 25].

Given the rapid integration of AI into healthcare, it is both urgent and essential to understand its true impact on the treatment of psychological disorders [26]. Despite notable technological advances, significant gaps persist regarding the efficacy, safety, and ethical considerations of AI-driven mental health interventions [27]. This systematic review sought to critically synthesize the existing empirical evidence to clarify the benefits, limitations, and practical challenges of AI applications within this field. By doing so, it aimed to equip clinicians, researchers, and policymakers with the knowledge needed to make informed, evidence-based decisions that optimize therapeutic outcomes while safeguarding patient rights and well-being. Ultimately, this work highlights the importance of responsible innovation—balancing cutting-edge technological advancements with compassionate, human-centered mental health care. Through a comprehensive analysis, this review evaluated the effectiveness of AI interventions in treating psychological disorders by drawing upon recent empirical findings.

Methods

Study design

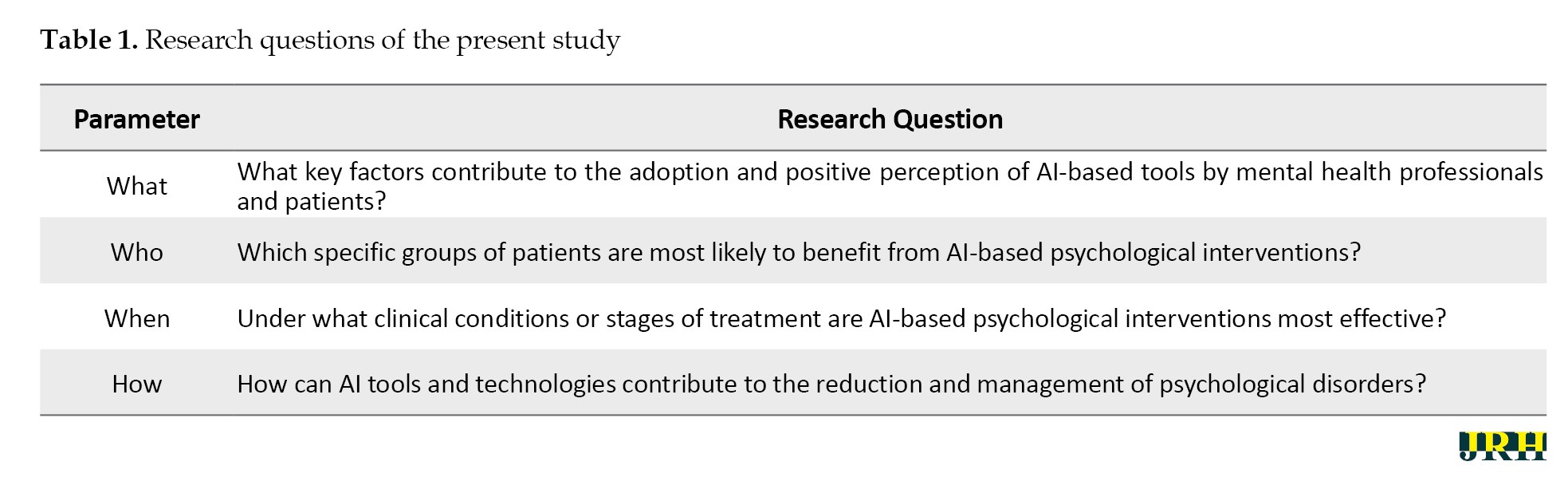

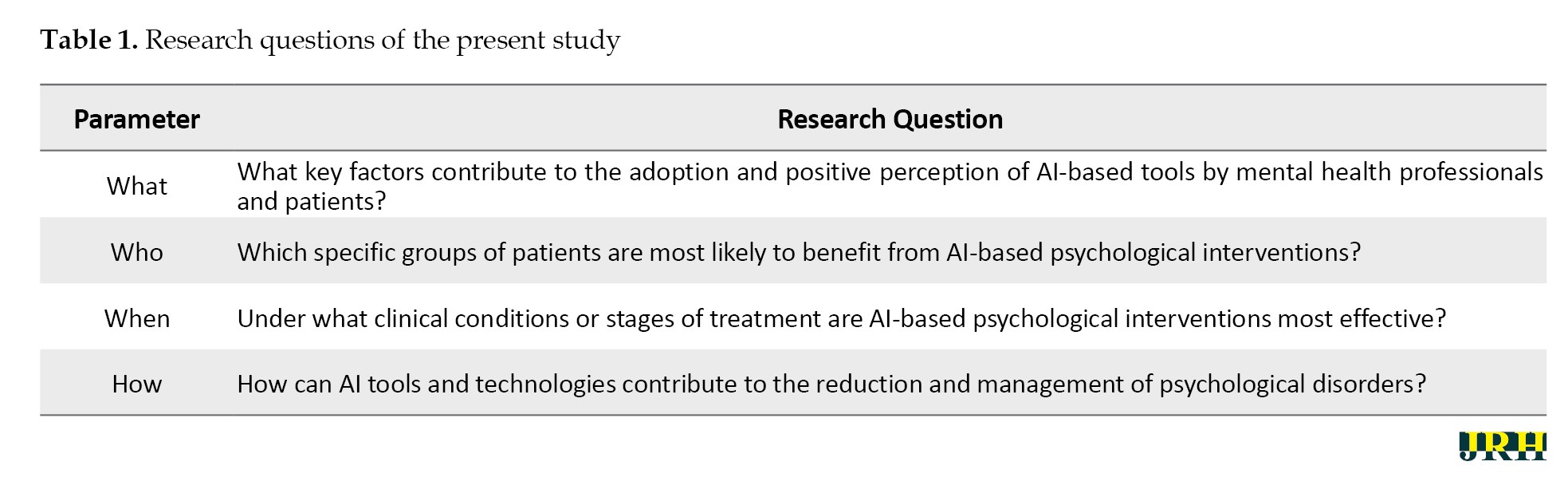

The present study is a systematic review conducted following the PRISMA [28] framework, aiming to investigate existing research on the effectiveness of AI interventions in the treatment of psychological disorders. The research was guided by a structured set of questions addressing key dimensions, such as purpose, target population, timing, and mechanisms of AI-based psychological interventions (Table 1).

Search strategy

A systematic search was conducted in Google Scholar, PubMed, ProQuest, Embase, PsycINFO, and Scopus for English-language articles published between January 2017 and April 2025, using specialized keywords such as “artificial intelligence,” “machine learning,” “digital mental health,” “psychological disorders,” and “AI-based interventions.”
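The exact Boolean combination of these keywords is not reported, so the following is only a hypothetical illustration of how such terms are commonly assembled for a database search: synonyms within a concept group are joined with OR, and concept groups are joined with AND. The grouping of keywords and the function name `boolean_query` are assumptions; database-specific field tags and date filters are deliberately left out.

```python
def boolean_query(concept_groups):
    """Join synonyms with OR inside each group and join groups with AND."""
    clauses = []
    for terms in concept_groups:
        quoted = [f'"{term}"' for term in terms]  # quote multi-word phrases
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical grouping of the keywords listed in the text.
groups = [
    ["artificial intelligence", "machine learning", "AI-based interventions"],
    ["digital mental health", "psychological disorders"],
]
print(boolean_query(groups))
```

Grouping by concept keeps the search sensitive (any synonym matches) while still requiring that every concept be present in a retrieved record.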

Eligibility criteria

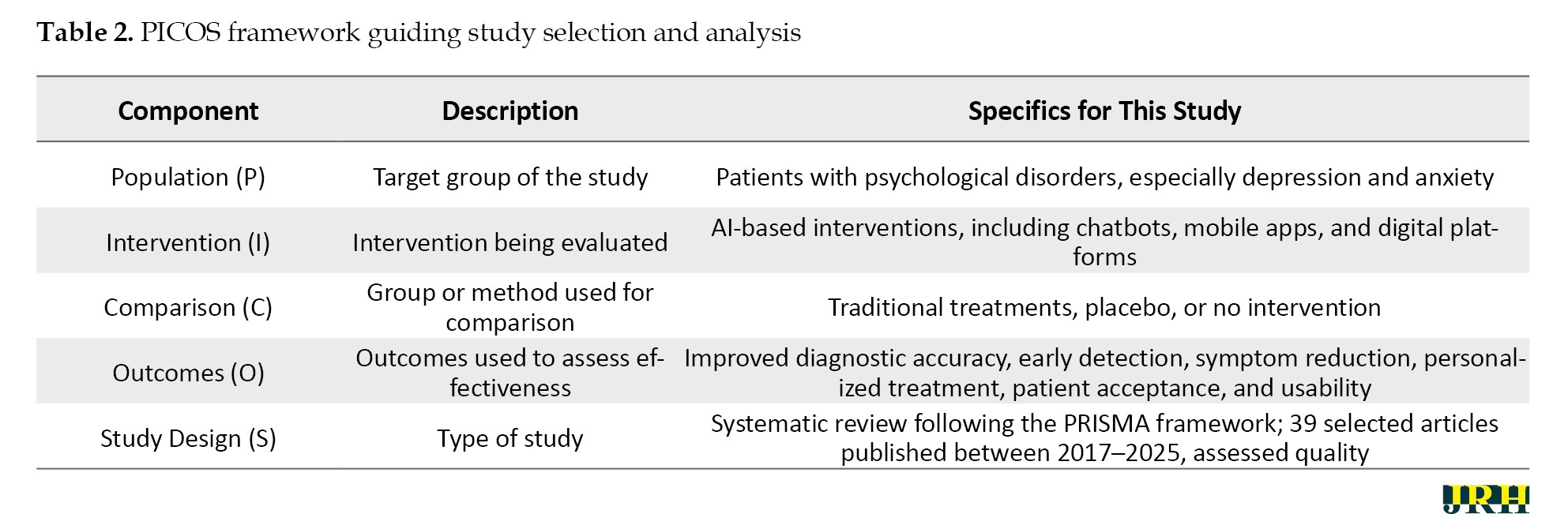

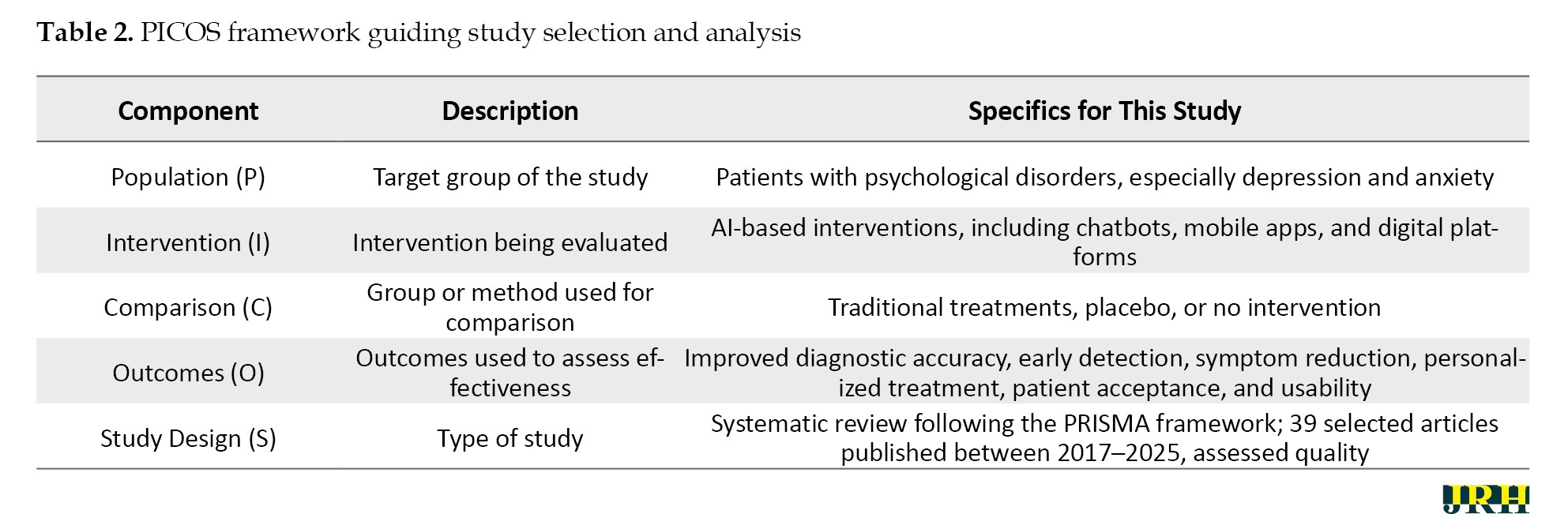

Studies were included if they targeted patients with major depressive disorder and anxiety symptoms and assessed the effectiveness of AI-based solutions in the diagnosis, prevention, or treatment of psychological disorders. Only articles using quantitative, qualitative, semi-experimental, or experimental designs were included. In addition, eligible publications had to meet the quality assessment criteria and be available in full text in English. Applying these inclusion criteria, structured according to the PICOS framework (Table 2), restricted the analysis to high-quality evidence focused specifically on the role of AI in mental health interventions.

Risk of bias assessment

The risk of bias of the included studies was independently assessed by different researchers using standardized checklists appropriate to each study design. Quantitative studies were evaluated for selection, performance, detection, attrition, and reporting bias. Qualitative studies were evaluated for credibility, transferability, dependability, and confirmability. Semi-experimental and experimental trials were rated on randomization, allocation concealment, blinding, completeness of outcome data, and selective reporting. Disagreements between reviewers were resolved through discussion and consensus. Studies judged to be at high risk of bias in critical domains were flagged, and their influence on the overall findings of the review was considered, to maintain transparency and methodological rigor.

Effect measures

The effects of AI-based interventions were assessed using a combination of quantitative and qualitative metrics. For quantitative studies, the effect measures were accuracy, specificity, sensitivity, area under the curve (AUC), predictive values, mean differences, and effect sizes wherever available. For qualitative studies, thematic analysis, participants’ perspectives, and changes in behavioral responses or cognition were used. To ensure consistency, comparability, and reproducibility, all effect measures were extracted onto a structured data extraction form. This approach provided an effective way to synthesize evidence on AI effectiveness and enabled useful cross-study comparisons despite methodological differences.
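The quantitative effect measures listed above follow standard definitions. As a minimal sketch, assuming a binary screening model whose outputs are compared against clinician-confirmed diagnoses, they can be computed as follows. The function names and all numbers are hypothetical toy values, not data from the included studies. AUC is computed here with the pairwise (Mann-Whitney) formulation, with ties counted as one half, which gives the same value as the area under the empirical ROC curve; the effect size is Cohen's d with a pooled standard deviation.

```python
from math import sqrt
from statistics import mean, stdev


def diagnostic_metrics(tp, fp, tn, fn):
    """Standard confusion-matrix metrics for a binary screening model."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
    }


def auc(scores_pos, scores_neg):
    """Probability that a random positive case outscores a random negative one."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(scores_pos) * len(scores_neg))


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * stdev(group_a) ** 2 + (nb - 1) * stdev(group_b) ** 2) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Toy example only.
print(diagnostic_metrics(tp=40, fp=10, tn=45, fn=5))
print(auc([0.9, 0.8, 0.4], [0.3, 0.5, 0.2]))
print(cohens_d([10, 12, 14], [6, 8, 10]))
```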

Quality assessment

All retrieved articles were evaluated using keyword searches focused on neuropsychological factors associated with brain abnormalities in patients with major depressive disorder accompanied by anxiety symptoms. To ensure thoroughness, the reference lists of eligible studies were also reviewed for additional relevant sources. Each of the 39 selected articles was independently analyzed by the researchers, who extracted data using a structured content analysis form. Any discrepancies were resolved through consensus, with strict adherence to the inclusion criteria to ensure that only studies addressing the effectiveness of AI interventions in treating psychological disorders were included.

The quality of each study was assessed using a standardized checklist covering various criteria, including the alignment of article structure with the type of study, clarity of research objectives, characteristics of the study population, sampling methods, data collection instruments, appropriate statistical analyses, defined inclusion and exclusion criteria, ethical considerations, accurate reporting of results in line with objectives, and discussion grounded in prior literature [29]. Based on established methodological standards, articles were rated on a binary scale (0 or 1) across relevant criteria for quantitative (6 criteria), qualitative (11 criteria), semi-experimental (8 criteria), and experimental (7 criteria) studies. Studies were excluded if they scored ≤4 in quantitative research, ≤6 in experimental or semi-experimental designs, or ≤8 in qualitative studies [30].
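The binary scoring rule above can be written down directly. The sketch below is a hypothetical encoding of the stated criterion counts and exclusion cutoffs (a study is excluded when its total score is at or below the cutoff for its design); the names `QUALITY_RULES` and `passes_quality` are illustrative and not part of the original checklist.

```python
# design -> (number of binary criteria, cutoff); a total score <= cutoff is excluded
QUALITY_RULES = {
    "quantitative": (6, 4),
    "qualitative": (11, 8),
    "semi-experimental": (8, 6),
    "experimental": (7, 6),
}


def passes_quality(design: str, item_scores: list[int]) -> bool:
    """Return True if a study's 0/1 checklist scores clear its design's cutoff."""
    n_criteria, cutoff = QUALITY_RULES[design]
    if len(item_scores) != n_criteria:
        raise ValueError(f"{design} studies are rated on {n_criteria} criteria")
    if any(score not in (0, 1) for score in item_scores):
        raise ValueError("each criterion is scored 0 or 1")
    return sum(item_scores) > cutoff


print(passes_quality("quantitative", [1, 1, 1, 1, 1, 0]))  # total 5 of 6
```

Read literally, the stated thresholds mean a quantitative study needs at least 5 of 6 points, a qualitative study at least 9 of 11, a semi-experimental study at least 7 of 8, and an experimental study all 7 of 7.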

Data collection and extraction

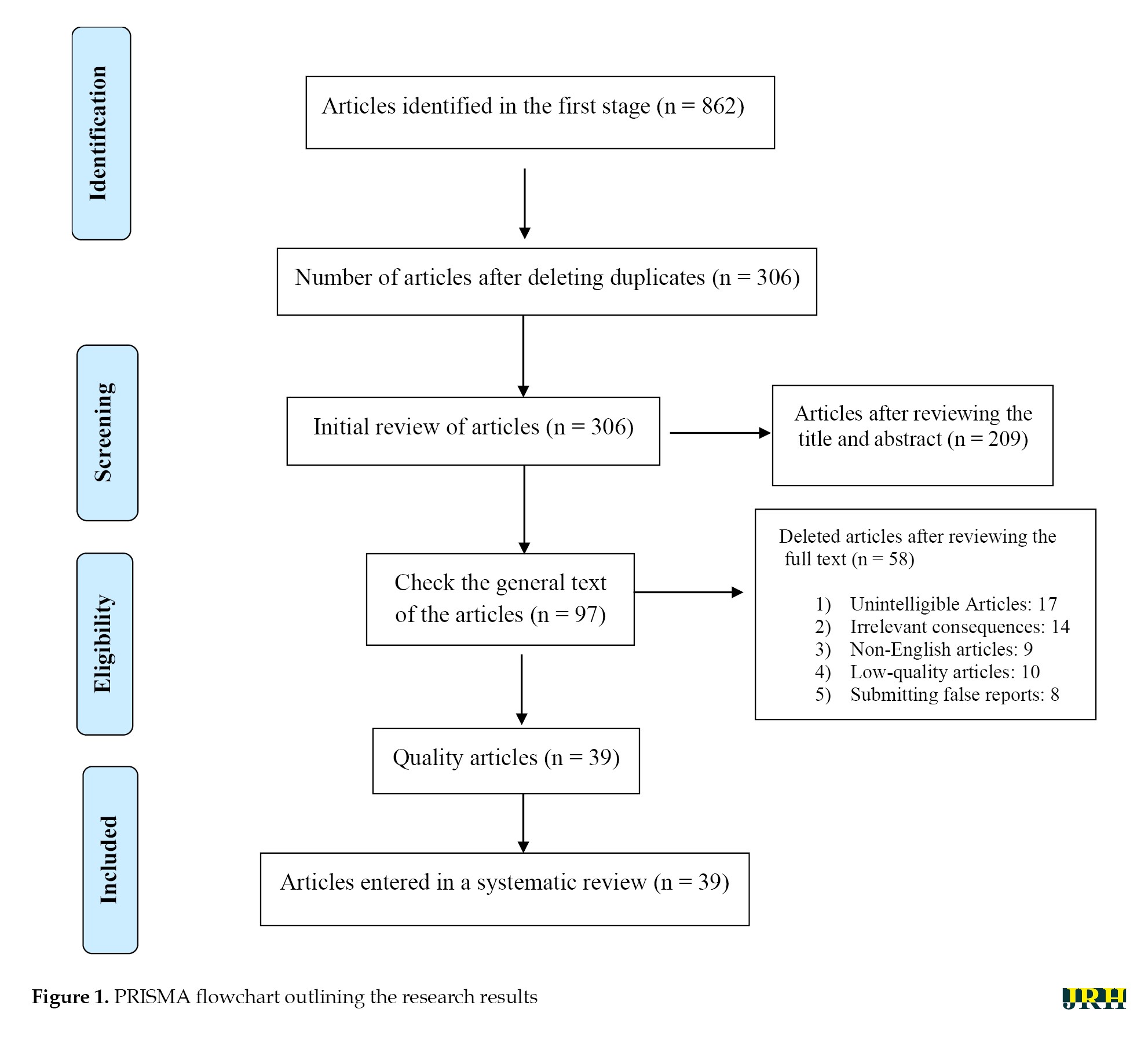

The abstracts of 862 published articles were initially screened, and duplicate entries were systematically removed through multiple stages. Following this rigorous selection and quality assessment process, a total of 39 articles were identified for comprehensive review and data extraction (Figure 1).

Results

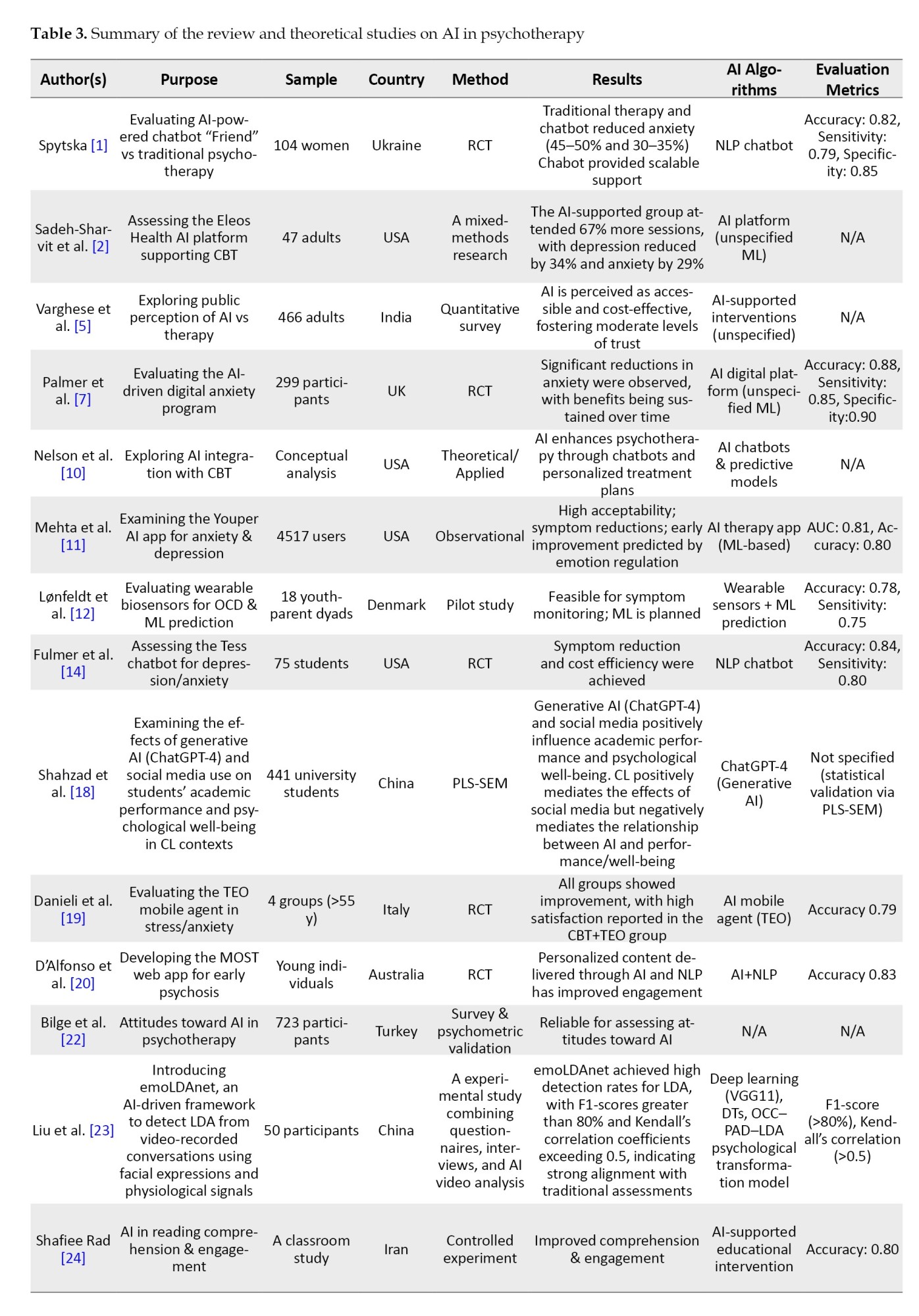

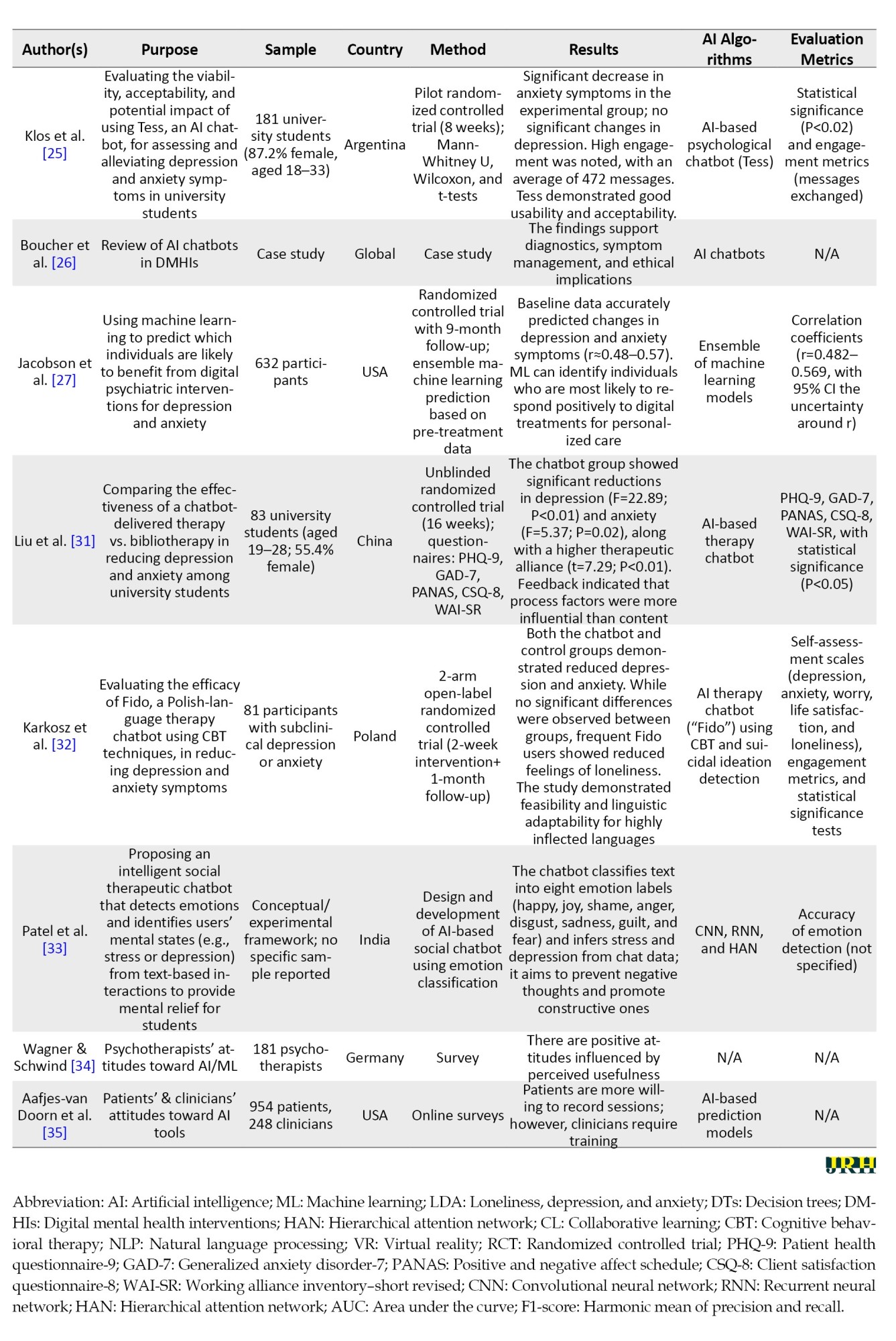

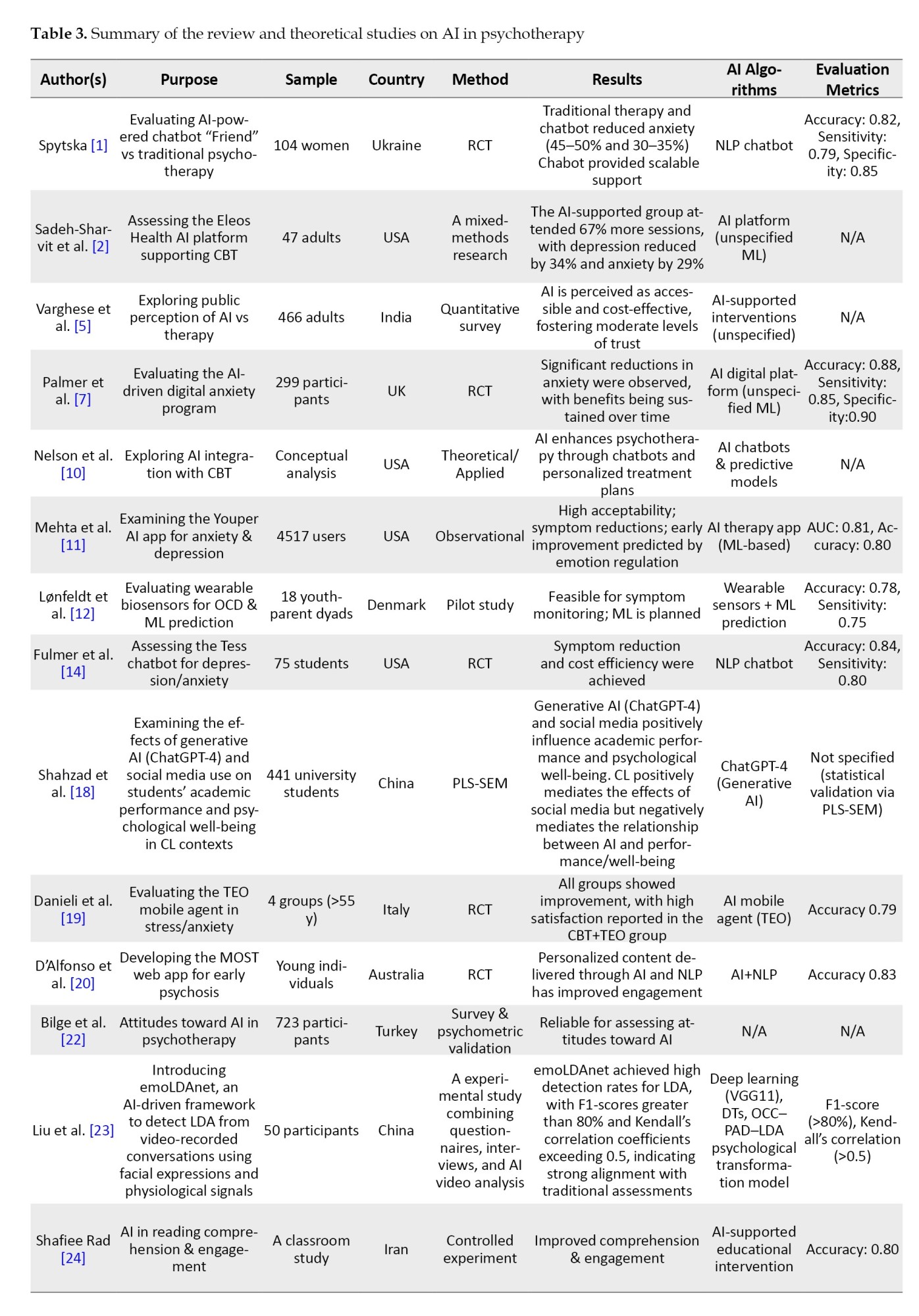

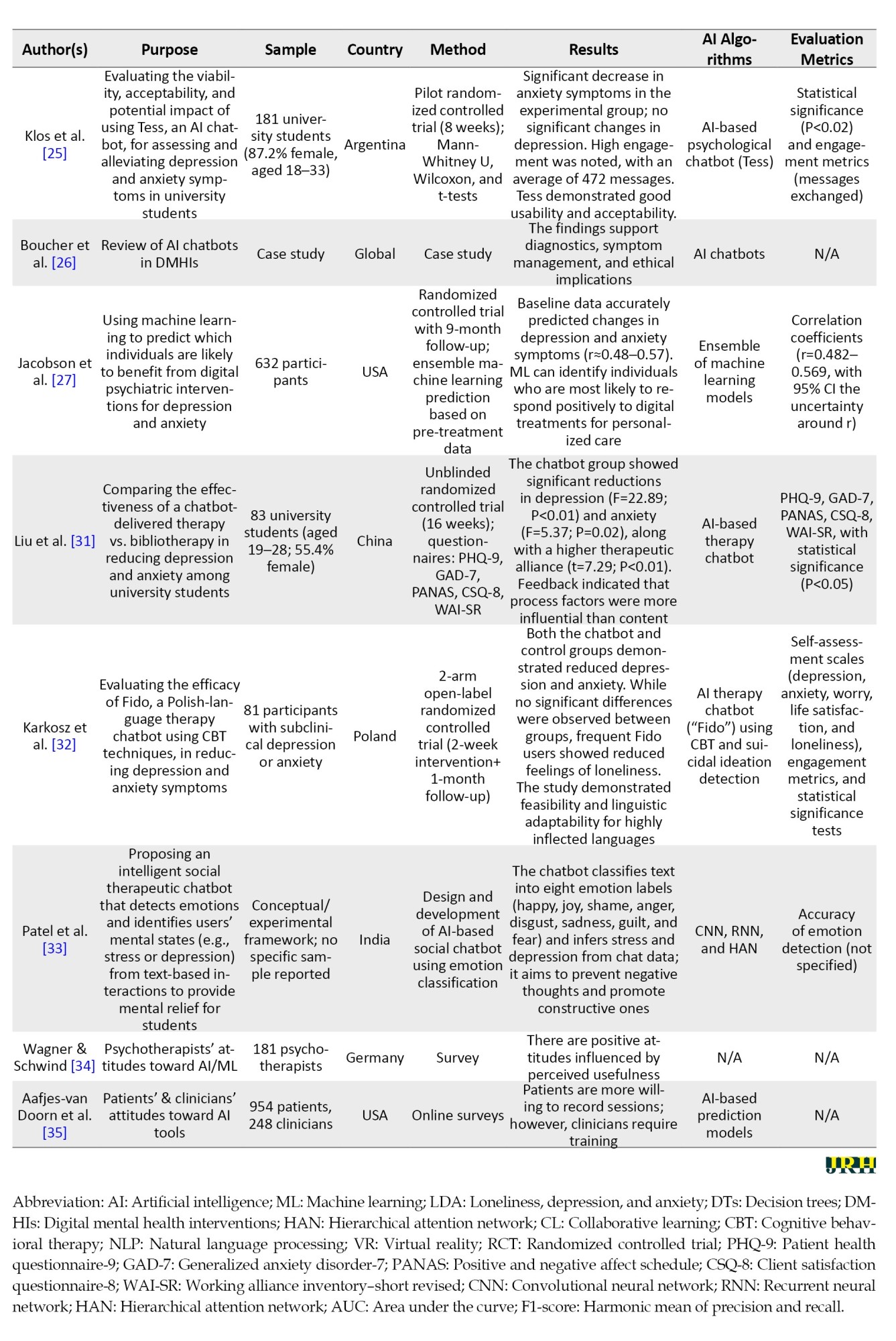

The present study systematically reviewed all available English-language articles addressing the effectiveness of AI-based interventions in the treatment of psychological disorders. Table 3 presents a comprehensive summary of these 39 studies, including the authors, purpose, sample characteristics, country or scope, research methodology, and key findings.

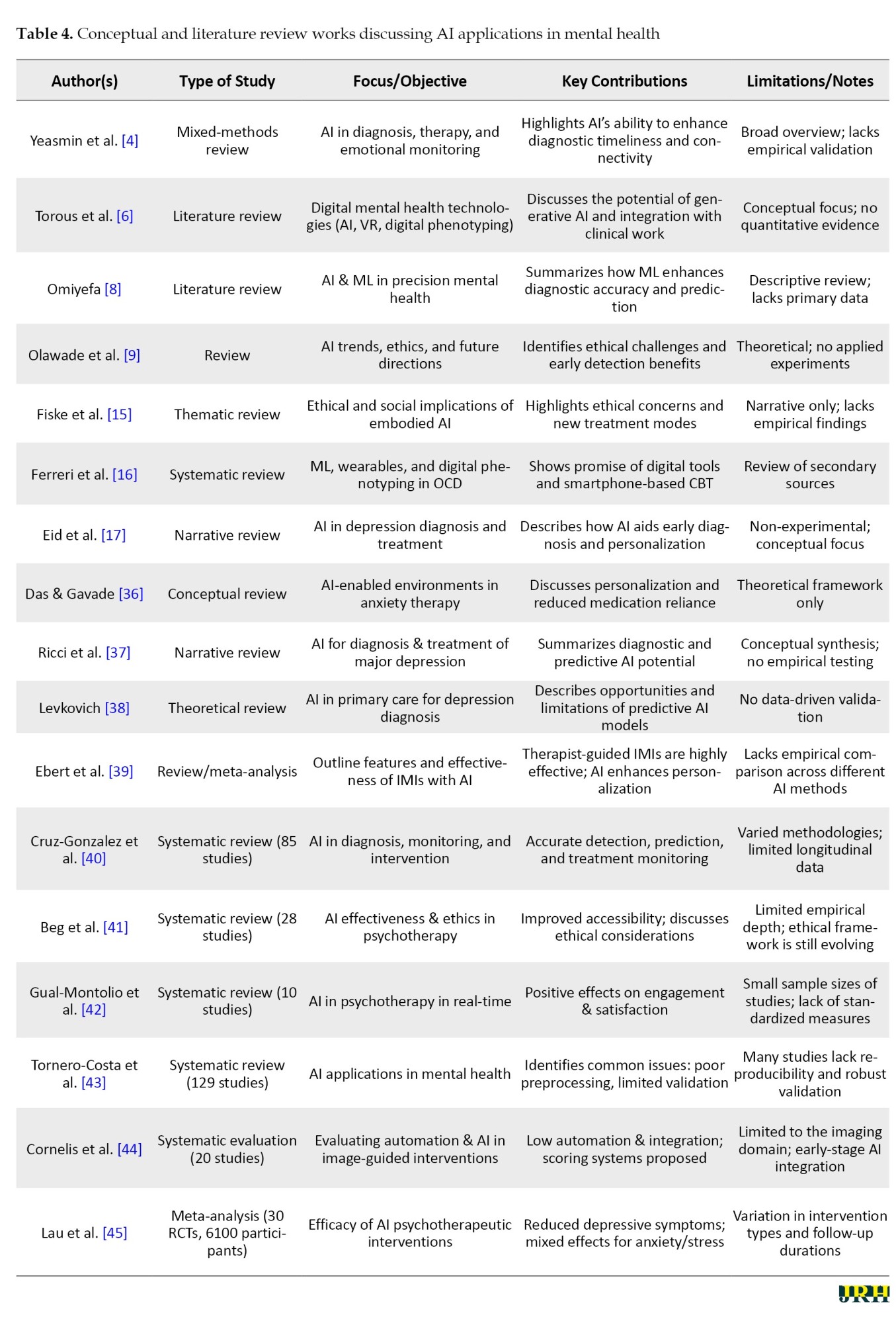

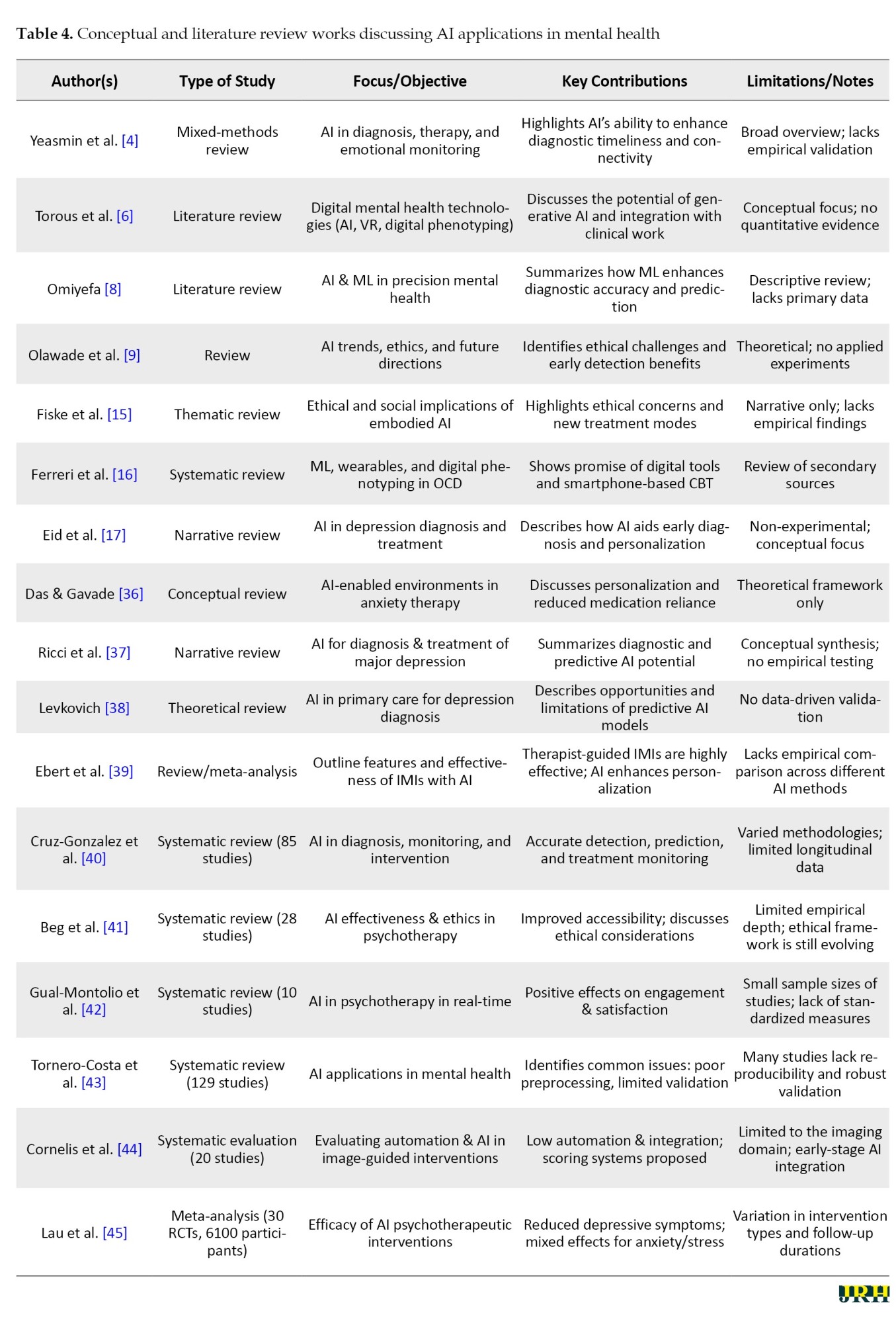

Tables 3 and 4 collectively illustrate two complementary strands of research on the integration of AI in psychotherapy.

Table 3 encompasses empirical and data-driven studies that provide measurable evidence of AI’s efficacy in clinical and therapeutic contexts, employing RCTs, observational designs, and psychometric validations to evaluate performance indicators, such as accuracy, sensitivity, and treatment outcomes. In contrast, Table 4 compiles conceptual and review-based works that delineate the theoretical, ethical, and methodological foundations of AI in mental health, yet largely lack experimental validation. This juxtaposition highlights a clear research gap: while empirical studies demonstrate the practical feasibility of AI tools, they often omit deeper theoretical framing, whereas conceptual studies discuss ethical and systemic implications without empirical support. The present study positions itself at the intersection of these two domains, empirically addressing the conceptual and ethical challenges identified in prior reviews and thereby contributing novel, evidence-based insights into the application of AI within psychotherapeutic practice.

Study characteristics

This review encompassed 39 studies from a diverse range of countries, including Iran, the United States, Germany, Denmark, China, India, the United Kingdom, Poland, Australia, Italy, Turkey, Ukraine, and multiple studies with a global or multi-country scope. The study designs span RCTs, cross-sectional surveys, systematic and narrative reviews, conceptual analyses, and feasibility studies. Sample sizes vary widely, ranging from small clinical groups (e.g. 9 to 104 participants) to large populations exceeding thousands (e.g. 4,500 or more app users). The diversity of study designs and populations reflects the broad interest in exploring the integration of AI into mental health diagnostics, treatment, and psychotherapy support worldwide.

Key findings of AI interventions

Key findings consistently highlight the promising potential of AI and ML technologies in enhancing mental health care. AI-powered tools demonstrated improved diagnostic accuracy, facilitated the early detection of depressive and anxiety disorders, and enabled personalized treatment plans based on patient-specific data, including behavioral markers, genetic information, and clinical history. Several studies reported significant reductions in anxiety and depression symptoms with AI-supported interventions, including digital programs, chatbots, and mobile apps, which often offered scalability, accessibility, and cost-effectiveness compared to traditional therapy. Importantly, many studies emphasize the complementary role of AI alongside human clinicians, advocating for hybrid models that leverage AI’s strengths without undermining the essential human connection in psychotherapy.

Despite these advances, multiple studies caution against overreliance on AI, underscoring challenges such as ethical concerns, data privacy, potential algorithmic bias, and the need for rigorous validation and clinician training. Attitudes toward AI varied: some clinicians expressed apprehension about being replaced, whereas patients often showed greater willingness to engage with AI-based tools, particularly for measurement and monitoring tasks. The literature calls for enhanced education and transparent frameworks to facilitate ethical and effective AI integration, ensuring that technological advancements augment rather than replace human-centered care in mental health practice.

Challenges and future directions

AI has considerable potential to transform mental health care delivery by enabling prompt diagnosis, individualized therapies, and support at a larger scale. However, several critical challenges must be resolved before safe, effective, and equitable implementation can be achieved. Grouping these challenges into distinct domains clarifies both the existing limitations and the directions future research should take.

Ethics and privacy issues

Questions about the use of AI as a mental health tool, such as patient privacy, data security, informed consent, and possible algorithmic bias, are highly contentious [11, 15, 23]. Sensitive clinical and behavioral data should be governed transparently to protect users and maintain integrity. Standardized ethical guidelines and regulatory frameworks should be established for AI-based mental health tools. It is important to encourage interpretable and auditable AI models, so that both clinicians and patients can understand and challenge their decisions. Moreover, future research should be conducted in a culturally sensitive manner to reduce the risk of bias and ensure that interventions are fair to diverse groups of people.

Methodological heterogeneity and validation

The available literature varies widely in design, samples, AI algorithms, and outcome measures, which limits comparability and hinders meta-analytic synthesis [3, 12, 17, 32]. Small samples, short follow-up periods, and inconsistent reporting undermine confidence in generalizability and clinical applicability. Comprehensive, multi-site, longitudinal studies using standardized protocols for data collection, evaluation, and reporting are needed. The focus must be on rigorous validation of AI models, replication studies, and preregistration of research protocols to ensure methodological transparency, reproducibility, and reliable research outcomes.
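The heterogeneity described above is what meta-analysts usually quantify, before deciding whether pooling is sensible, with Cochran's Q and the I² statistic. A minimal fixed-effect (inverse-variance) sketch follows: each study gets weight 1/vᵢ, Q is the weighted sum of squared deviations from the pooled estimate, and I² = max(0, (Q − df)/Q) with df = k − 1. The effect sizes and variances in the example are hypothetical and do not come from the reviewed studies.

```python
def heterogeneity(effects, variances):
    """Fixed-effect pooled estimate, Cochran's Q, and I^2 (in percent)."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # Share of total variability attributable to between-study differences
    i2 = 0.0 if q <= df else (q - df) / q * 100.0
    return pooled, q, i2


# Hypothetical standardized effects from three studies with equal variances.
pooled, q, i2 = heterogeneity([0.2, 0.5, 0.8], [0.04, 0.04, 0.04])
print(f"pooled={pooled:.2f}, Q={q:.2f}, I2={i2:.1f}%")
```

By convention, I² values around 25%, 50%, and 75% are read as low, moderate, and high heterogeneity; high values argue against pooling the studies into a single estimate.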

Clinician education and development

Inadequate knowledge and awareness among clinical staff hinder the implementation of AI tools and their integration into established workflows, which can reduce usability and engagement [8, 34]. Without proper training, clinicians may underutilize or misinterpret AI suggestions, negatively affecting patient care. Systematic clinician training programs should be designed, covering AI literacy, interpretation of decision-support outputs, and workflow integration. Additionally, hybrid care models that support rather than replace human skills should be explored, with their effectiveness examined in terms of clinician efficiency, patient outcomes, and therapeutic relationships.

Explainable AI in clinical care: Enhancing trust and performance

Black-box AI models that do not explain their outputs undermine clinician and patient confidence, ultimately diminishing clinical performance [20, 31]. Ethical deployment requires transparency and support for the therapeutic alliance. It is essential to develop explainable and interpretable AI systems whose predictions and recommendations are accompanied by clear justifications. Future research should examine how model transparency affects clinicians’ reasoning, patient adherence, and overall engagement in the care process. User-centered design principles should be applied to make AI interventions acceptable and usable for diverse populations.

Justice, marginalization, and discrimination

Unequal access to digital health technologies may widen existing inequalities, particularly in underserved or under-resourced settings and among culturally diverse populations [21, 32]. Without deliberate design, AI interventions may favor privileged populations. AI design should therefore be inclusive, accounting for socio-economic, cultural, and geographic diversity. It is crucial to determine the long-term sustainability, scalability, and cost-effectiveness of these interventions to ensure widespread access. Further studies are also required to examine ways to reduce digital literacy barriers and to incorporate feedback from underrepresented users, thereby improving both uptake and performance.

Effects on the long-term results and sustainability

Most publications focus on short-term results, so it remains unclear whether the effects of AI interventions are durable. Understanding their long-term effects is essential to determine clinical utility and inform implementation plans [32, 33]. Longitudinal studies should assess whether clinical benefits, engagement, and behavioral changes are maintained over time. It is also important to evaluate interventions under real-world conditions, along with their scalability, their impact on healthcare systems, and potential opportunities for cost savings.

To provide context for the current study, a comparison with previous review papers is presented in Table 5.

This table summarizes key aspects of earlier studies, including the main objective of each review, the type of medical data used, the AI models applied, citation counts, and other relevant information.

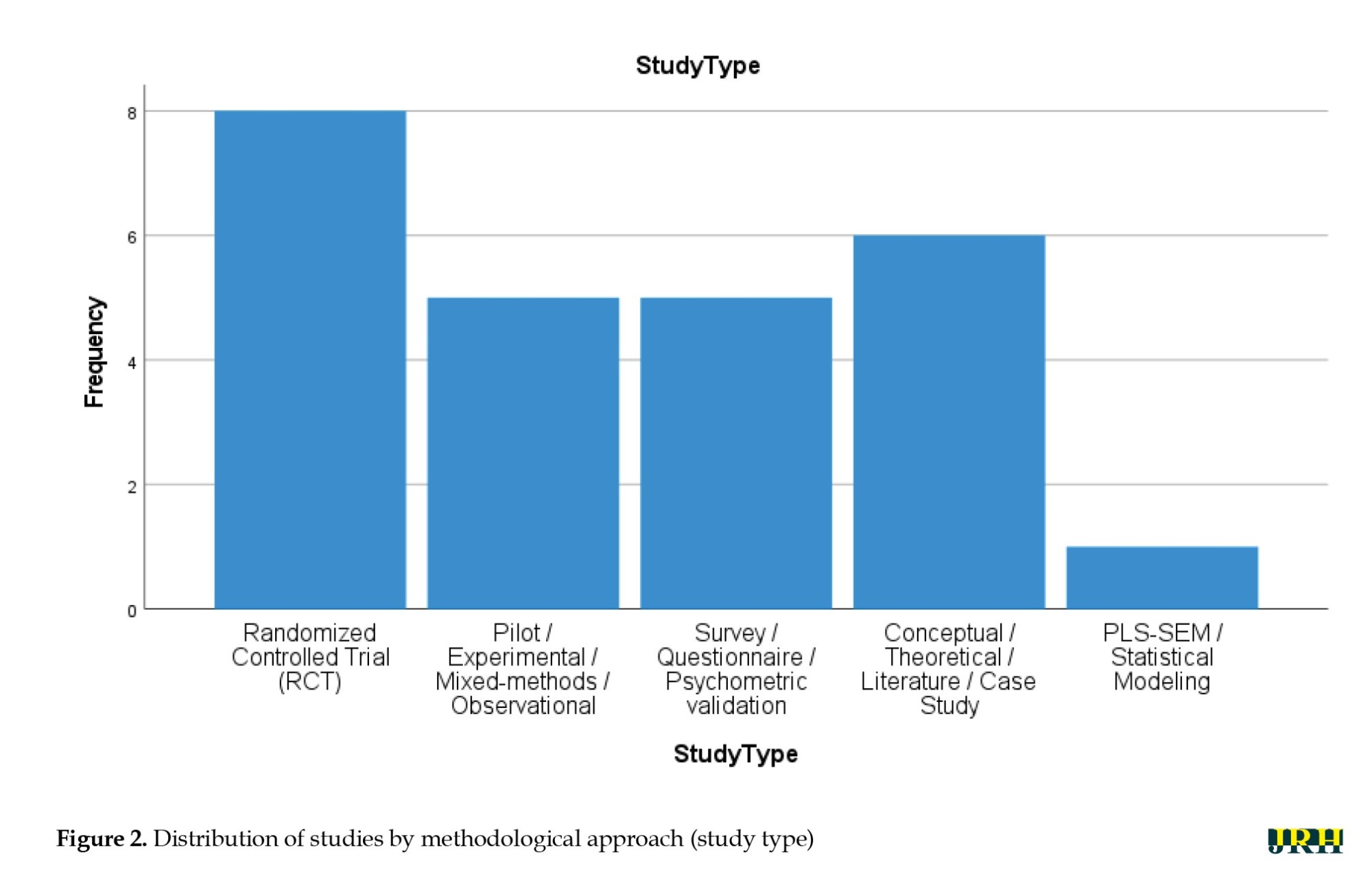

Figure 2 displays the overall methodological composition of the reviewed studies. RCTs accounted for the largest proportion (n=8), demonstrating the growing empirical validation of AI-assisted psychotherapy tools. Five studies employed pilot, experimental, mixed-methods, or observational designs, reflecting exploratory and developmental efforts in this emerging domain. Another five studies relied primarily on survey or questionnaire-based data, including psychometric validations assessing user or clinician attitudes toward AI. Six papers were conceptual, theoretical, or literature-based, indicating strong theoretical grounding and ethical discourse surrounding AI in psychotherapy. Finally, one study utilized partial least squares structural equation modeling (PLS-SEM), representing an advanced statistical approach to evaluating the psychological and academic effects of generative AI.

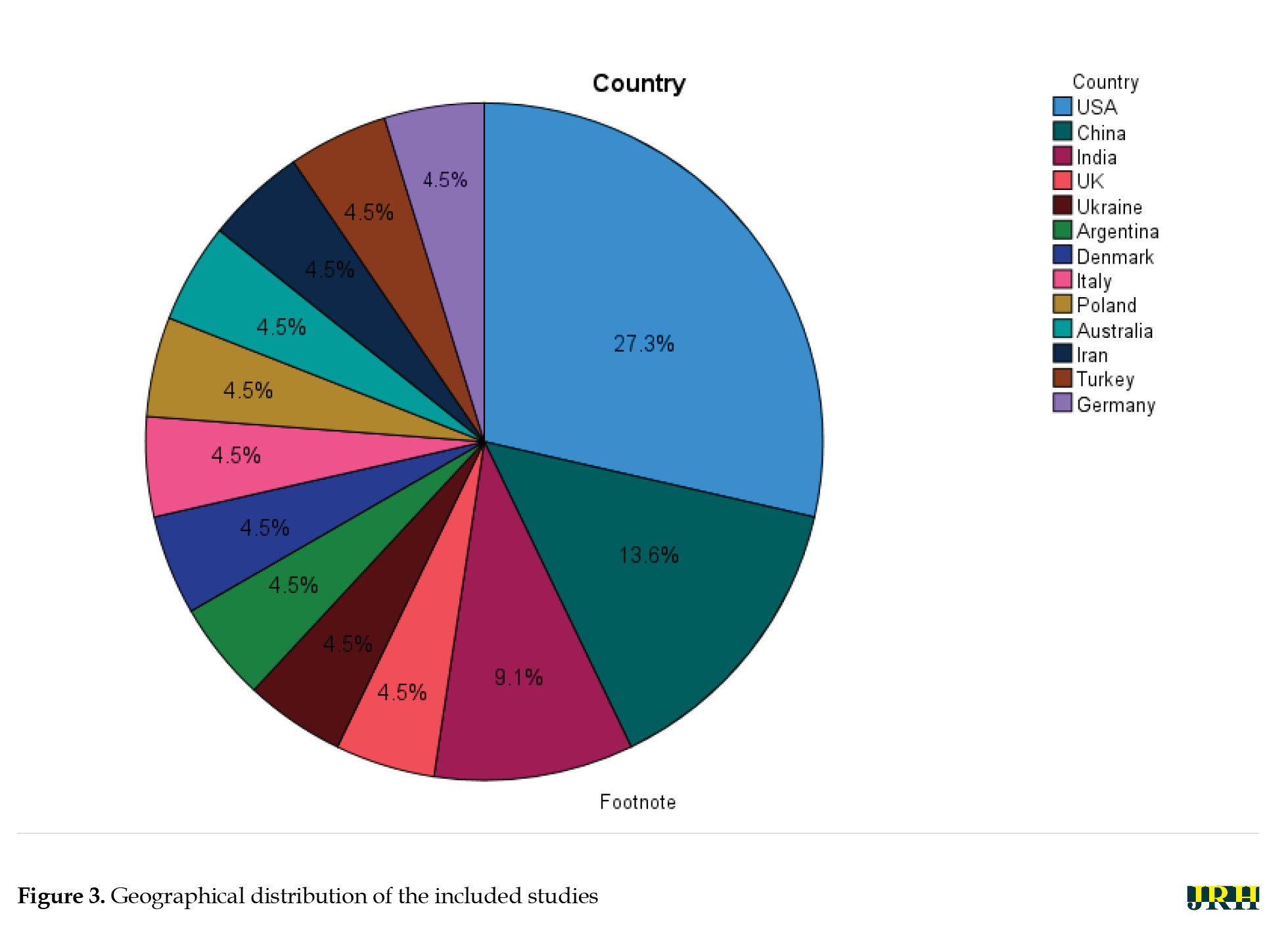

Figure 3 illustrates the international distribution of research on AI applications in psychotherapy and mental health. Out of the 22 primary empirical studies analyzed, the United States accounted for the largest share (6 studies; 27.3%), followed by China (3 studies; 13.6%) and India (2 studies; 9.1%). Single studies were conducted in the United Kingdom, Ukraine, Argentina, Denmark, Italy, Poland, Australia, Iran, Turkey, and Germany (each 1 study; 4.5%). In addition, one study (4.5%) was classified as “Global/International,” encompassing data from multiple regions or cross-cultural contexts. This geographical distribution demonstrates growing global engagement with AI-assisted psychotherapy, though a notable concentration remains in Western and East Asian research contexts.
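The percentages reported for Figure 3 are simple shares of the 22 primary empirical studies. The short check below reproduces them from the study counts given in the text, rounding to one decimal place; the variable and function names are illustrative only.

```python
# Study counts per country as reported for Figure 3.
COUNTS = {
    "United States": 6, "China": 3, "India": 2,
    "United Kingdom": 1, "Ukraine": 1, "Argentina": 1, "Denmark": 1,
    "Italy": 1, "Poland": 1, "Australia": 1, "Iran": 1, "Turkey": 1,
    "Germany": 1, "Global/International": 1,
}


def shares(counts):
    """Return the total and each category's percentage, rounded to 0.1."""
    total = sum(counts.values())
    return total, {name: round(100 * n / total, 1) for name, n in counts.items()}


total, pct = shares(COUNTS)
print(f"total studies: {total}")
for name, p in sorted(pct.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {COUNTS[name]} ({p}%)")
```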

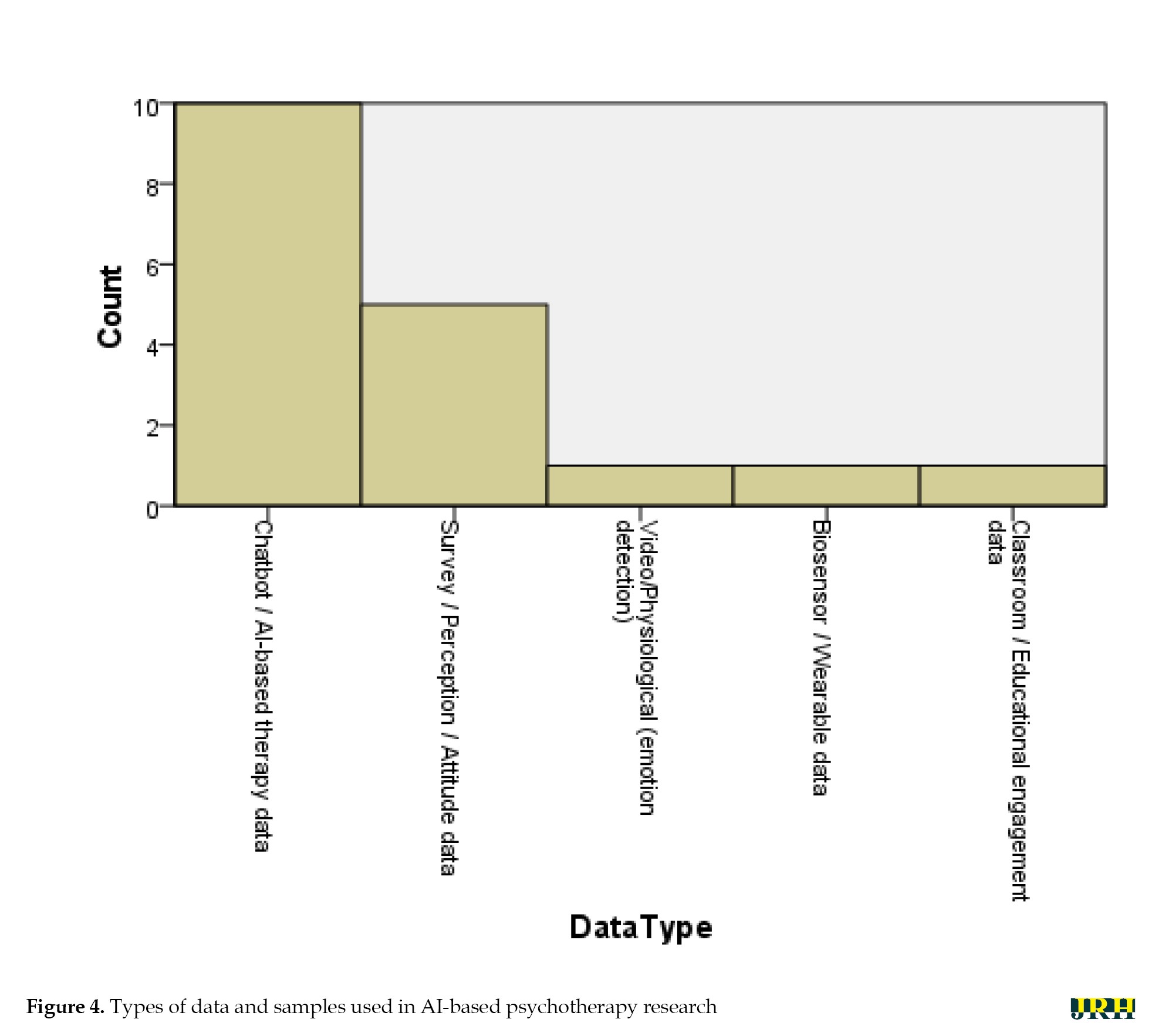

Figure 4 summarizes the distribution of data sources and participant samples across the included studies. Chatbot- or AI-based therapy data constituted the majority (n=10; 45.5%), reflecting the growing prominence of conversational agents in clinical and subclinical mental health interventions. Survey-, perception-, or attitude-based data were used in five studies (22.7%), primarily to assess user and clinician acceptance, ethical concerns, and perceived utility of AI tools. One study (4.5%) employed multimodal video and physiological data to detect emotional states through advanced computational models, while one study (4.5%) utilized wearable biosensor data for predictive modeling in obsessive-compulsive disorder. Additionally, one study (4.5%) analyzed classroom and educational engagement data, demonstrating the versatility of AI applications beyond traditional psychotherapy contexts.

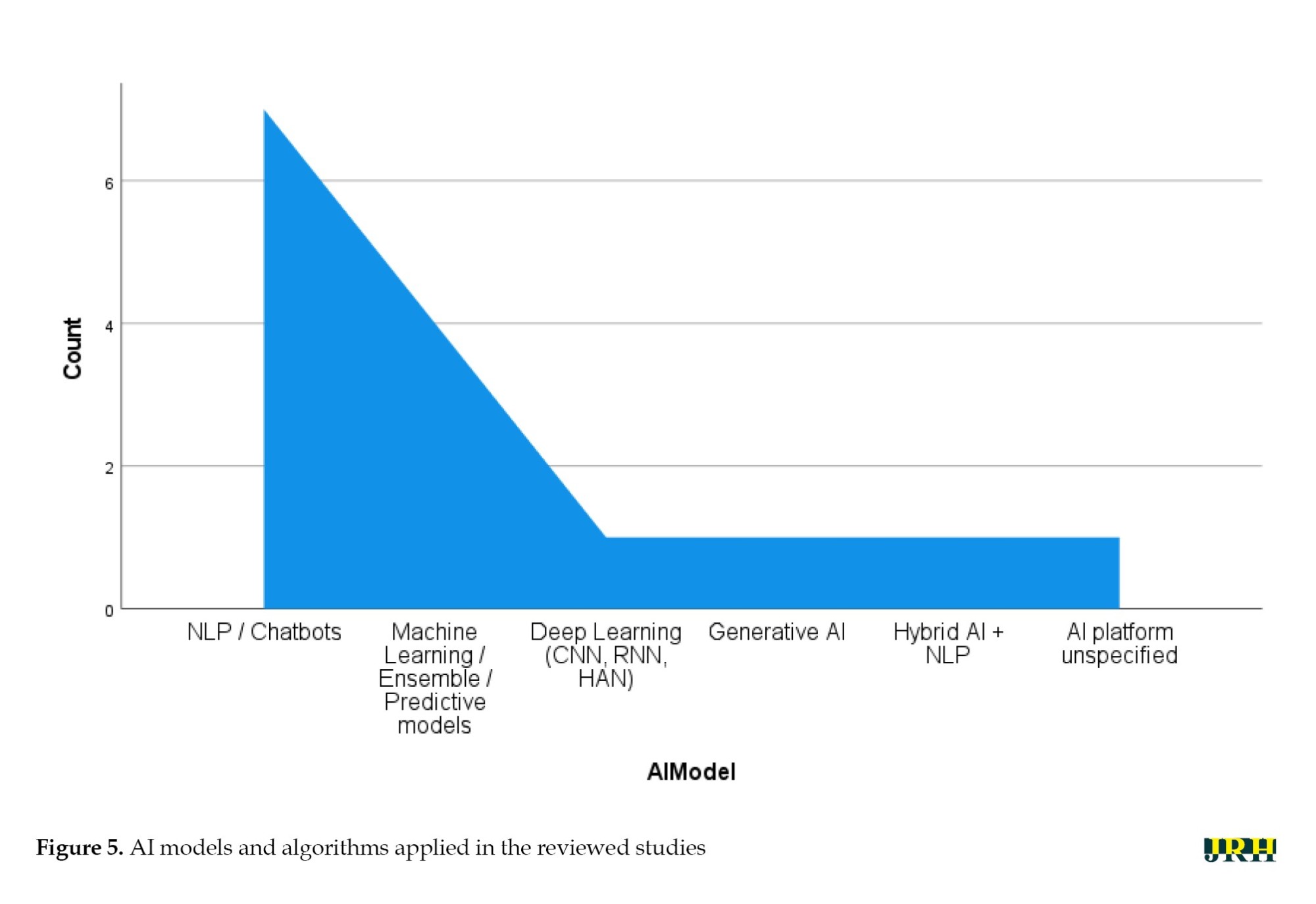

Figure 5 presents the distribution of AI models and computational approaches reported across the analyzed literature. NLP and chatbot-based systems were most prevalent (n=7), emphasizing their role in delivering automated therapeutic dialogue and emotional support. Four studies implemented general ML or ensemble predictive models, focusing on diagnostic prediction and personalized treatment response. One study used deep learning architectures (convolutional neural networks, CNN; recurrent neural networks, RNN; and hierarchical attention networks, HAN) for emotion classification, and another applied generative AI (ChatGPT-4) to assess its influence on academic and psychological outcomes. A final study integrated hybrid AI and NLP models, reflecting the trend toward multimodal and adaptive AI frameworks in digital psychotherapy.

Discussion

Novelty and significance

This systematic review presents a synthesis of evidence based on 39 studies conducted in various countries across different study designs, including RCTs, observational studies, conceptual analyses, and narrative/systematic reviews. In contrast to other reviews that focused on a specific disorder, a particular AI tool, or a limited group of interventions, this research demonstrates a holistic, integrative view of AI applications in mental health, including diagnostics, monitoring, and intervention. Critically, it emphasizes AI as a supplement to human clinicians and presents hybrid care models as the most effective in terms of the quality of the therapeutic process and efficiency [11, 17, 32]. The broad coverage of this review allows for the identification of global trends, underlying factors, and gaps that have emerged, thereby providing valuable insights for clinicians, policymakers, and researchers planning to incorporate AI into mental health systems [21, 32]. This diversity enables the review to highlight the potential of AI in improving clinical decision-making, patient involvement, and system efficiency.

Advancements and research trends

According to the literature, interest in AI-based mental health interventions has grown rapidly since 2017. Earlier work focused mainly on exploratory, small-scale, proof-of-concept studies, whereas recent articles involve larger samples, multi-site designs, and more sophisticated ML and deep learning algorithms for predictive diagnostics and tailored treatment planning [11, 18, 32]. This trend signals the maturation of AI methodologies, increasing methodological rigor, and growing confidence in their clinical applicability. The shift from conceptual studies to empirically validated interventions indicates a readiness to implement AI in mental health practice and marks a crucial transition toward scalable, evidence-based solutions [3, 12, 17].

AI models and mechanisms

Mental health has been the subject of a wide range of AI solutions. NLP-based chatbots are useful for psychoeducation, symptom monitoring, and therapeutic interaction [1, 14, 26]. ML methods, such as support vector machines, random forests, and neural networks, support diagnostic prediction and risk classification and can inform personalized treatment recommendations [13, 23, 29]. Reinforcement learning and hybrid AI-human components make adaptive, real-time interventions possible, maximizing engagement and clinical outcomes while preserving the therapeutic relationship [20, 28, 32]. Taken together, these studies demonstrate that AI is not intended to substitute for clinicians but rather to serve as a powerful complement to their practice, enhancing accessibility, accuracy, and customization.
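The reviewed studies attribute adaptive, real-time tailoring to reinforcement learning without specifying an algorithm here. As a purely hypothetical illustration, the simplest such scheme, an epsilon-greedy multi-armed bandit, repeatedly chooses among intervention modules and updates a running estimate of each module's benefit from binary patient feedback. The module names, success probabilities, and simulated feedback are invented for this sketch and are not drawn from the reviewed studies.

```python
import random


class EpsilonGreedySelector:
    """Epsilon-greedy bandit over candidate intervention modules (hypothetical)."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.arms}
        self.values = {a: 0.0 for a in self.arms}  # running mean feedback per module

    def select(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)  # explore: try a random module
        return max(self.arms, key=lambda a: self.values[a])  # exploit the best estimate

    def update(self, arm, reward):
        self.counts[arm] += 1
        # Incremental update of the sample mean for this module
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


def simulate(true_rates, steps=5000, epsilon=0.1, seed=0):
    """Simulate binary 'helpful / not helpful' feedback for each module."""
    selector = EpsilonGreedySelector(true_rates, epsilon=epsilon, seed=seed)
    feedback_rng = random.Random(seed + 1)
    for _ in range(steps):
        arm = selector.select()
        reward = 1.0 if feedback_rng.random() < true_rates[arm] else 0.0
        selector.update(arm, reward)
    return selector


rates = {"psychoeducation": 0.3, "cbt_exercise": 0.6, "mood_check_in": 0.4}
sel = simulate(rates)
print(sel.counts)
```

The epsilon parameter trades off exploring less-used modules against delivering the one currently estimated to help most; real clinical systems would add safety constraints and clinician oversight that this sketch omits.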

Clinical impact and patient outcomes

AI interventions have consistently demonstrated improved diagnostic accuracy and have aided the timely identification of psychological conditions, especially depression and anxiety [11, 15, 17]. Treatment plans tailored to behavioral, genetic, and clinical data enhance treatment adherence, patient engagement, and symptom reduction [5, 7, 32]. Chatbots, mobile apps, and web-based therapies delivered through digital platforms are scalable, user-friendly, and affordable, especially in areas with a shortage of licensed mental health workers [6, 22]. Hybrid AI-human models maintain treatment efficacy while fostering a therapeutic alliance, a significant determinant of trust [32, 35]. Overall, these findings highlight the transformative capabilities of AI in mental health care, which can simultaneously enhance the quality of care, ethical practice, and therapeutic relationships.

Ethical, practical, and implementation considerations

Despite these developments, major ethical, practical, and implementation issues remain. Key ethical concerns include data privacy, algorithmic bias, informed consent, and accountability for AI-assisted decisions [11, 15, 23]. Clinicians’ fears, insufficient training, and difficulties integrating AI into existing workflows hinder adoption [8, 34]. Strong methodological rigor is also necessary given the inherent limitations of algorithms and the lack of standard validation systems [20, 31]. These concerns must be addressed to achieve safe, equitable, and successful implementation of AI in practice, enabling scientifically sound, ethically responsible, and meaningful interventions.

Conclusion

This systematic review synthesized existing evidence on the implementation of AI in mental health care across a wide variety of applications, including, but not limited to, AI-based chatbots, online platforms, predictive modelling, wearable devices, and virtual reality-based interventions. Across diverse populations, study designs, and clinical settings, AI interventions have shown significant potential to increase diagnostic accuracy, tailor therapy plans, improve patient interaction, and enhance accessibility for individuals with mental health vulnerabilities. Notably, AI-assisted platforms may enable the early detection and prediction of depressive, anxiety, and stress symptoms while supplementing conventional psychotherapy strategies.

Despite these advances, the review found substantial heterogeneity in study designs, sample sizes, populations, AI algorithms, and outcome measures, which hinders direct comparability and possible meta-analytic synthesis. Many studies were based on small or homogeneous cohorts with short-term follow-ups and varied reporting standards, limiting the ability to draw conclusions about long-term effectiveness, scalability, and sustainability. Responsible implementation of AI in mental health care further requires attention to ethical considerations, transparency, and interpretability, as well as methodological rigor.

In conclusion, while AI offers groundbreaking opportunities to improve patient-centered mental health care, its full potential cannot be realized without rigorous longitudinal, large-scale studies. Future research should focus on more diverse populations, less common disorders, and hybrid AI-human interventions that follow standardized integration protocols. The cost-effectiveness, equity, and long-term outcomes of these interventions also need to be evaluated. By promoting such integration, AI can be used safely and optimally in clinical practice, ultimately enhancing personalized, ethical, and accessible mental health care worldwide.

Limitations and future directions

The identified papers demonstrated a substantial opportunity to implement AI in mental health care through chatbots, online services, predictive analytics, and wearable sensors. However, several limitations restrict the generalizability and comparability of the findings. Heterogeneity in study designs, sample sizes, populations, AI models, and outcome measures rules out direct cross-study comparison and meta-analytic synthesis. Most of the research is based on small, homogeneous, or short-term cohorts, so long-term efficacy, scalability, and sustainability have not been adequately investigated. Inconsistent reporting, weak methodological procedures, and variable study quality also make interpretation and replication challenging. Future studies should concentrate on large-scale, longitudinal evaluations of the sustainability of clinical outcomes, cost-effectiveness, and accessibility across a wide variety of populations and underrepresented disorders. Prioritizing hybrid AI-human interventions with standardized integration protocols and interpretable models will help minimize bias, while ethically informed frameworks will be essential to ensure that implementation is fair, safe, and effective. Addressing these gaps will improve the reliability, generalizability, and clinical applicability of AI-based mental health interventions.

Ethical Considerations

Compliance with ethical guidelines

There were no ethical considerations to be addressed in this research.

Funding

This research did not receive any grant from funding agencies in the public, commercial, or non-profit sectors.

Authors' contributions

All authors equally contributed to preparing this article.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors would like to thank all individuals and institutions that contributed to the completion of this study. Special appreciation is extended to the researchers whose work was reviewed in this systematic analysis.

Introduction

The prevalence of psychological disorders continues to rise globally, imposing substantial burdens on individuals, families, and healthcare systems [1]. Mental health disorders, such as major depressive disorder, generalized anxiety disorder, bipolar disorder, and schizophrenia, not only diminish quality of life but also contribute to increased morbidity, disability, and mortality worldwide [2]. Despite advances in psychopharmacology and psychotherapy, traditional mental health care delivery remains challenged by delays in diagnosis, heterogeneity of clinical presentations, limited access to specialized care, and variability in treatment response [3]. These challenges necessitate innovative approaches to enhance early detection, optimize personalized treatment, and improve overall clinical outcomes [4]. Against this backdrop, artificial intelligence (AI) has emerged as a transformative technology with the potential to fundamentally reshape mental health diagnosis, intervention, and management [5].

AI refers to the development of computer systems capable of performing tasks that typically require human intelligence, including learning, reasoning, problem-solving, and pattern recognition [6]. In mental health care, AI encompasses various subfields, such as machine learning (ML), natural language processing (NLP), computer vision, and deep neural networks [7]. These technologies enable the extraction and analysis of complex and high-dimensional data from diverse sources, including electronic health records (EHRs), neuroimaging scans, genetic profiles, speech and behavioral patterns, and digital phenotyping via smartphones and wearable devices [8]. By leveraging this data, AI systems can identify subtle clinical markers, predict disease trajectories, and tailor interventions with unprecedented precision, thereby offering an evidence-based complement to traditional clinician judgment [9].

The increasing global burden of mental disorders is compounded by a significant shortage of qualified mental health professionals, particularly in low- and middle-income countries, and in underserved rural areas [10]. This treatment gap leads to underdiagnosis, delayed interventions, and poor prognosis for many patients [11]. Digital mental health technologies powered by AI offer scalable and cost-effective solutions capable of extending the reach of care beyond traditional clinical settings [12]. For instance, AI-driven mobile applications and conversational agents (chatbots) provide continuous symptom monitoring, psychoeducation, cognitive behavioral therapy (CBT), and crisis support accessible anytime and anywhere [13]. These modalities not only improve patient engagement and adherence but also reduce the stigma and logistical barriers often associated with face-to-face therapy [14].

Despite the significant potential of AI to revolutionize psychological care, its integration raises critical ethical, legal, and practical concerns [15]. The use of sensitive mental health data necessitates robust privacy protections and transparent data governance frameworks to maintain patient confidentiality and trust [16]. Algorithmic bias and lack of representativeness in training datasets can perpetuate health disparities and reduce the validity of AI applications across diverse demographic and cultural populations [17]. Additionally, overreliance on AI could inadvertently diminish the therapeutic alliance, a core factor in successful psychological treatment, by sidelining human empathy and nuanced clinical decision-making [18]. Therefore, ethical frameworks, rigorous validation studies, and clinician training programs are essential to safeguard patient welfare and maximize the benefits of AI-assisted interventions [19].

In recent years, a burgeoning number of empirical studies have investigated the efficacy and utility of AI-based interventions for psychological disorders [20, 21]. Research has demonstrated AI’s capability to improve diagnostic accuracy through automated pattern recognition in neuroimaging and speech analysis, facilitate early identification of prodromal symptoms, and enhance treatment personalization through adaptive algorithms that adjust therapeutic content based on real-time patient feedback [22]. Randomized controlled trials (RCTs) and observational studies have also reported positive outcomes in symptom reduction and functional improvement using AI-facilitated digital therapeutics [23]. However, heterogeneity in study methodologies, sample populations, intervention modalities, and outcome measures poses challenges for drawing definitive conclusions, underscoring the need for systematic synthesis and critical appraisal [24, 25].

Given the rapid integration of AI into healthcare, it is both urgent and essential to understand its true impact on the treatment of psychological disorders [26]. Despite notable technological advances, significant gaps persist regarding the efficacy, safety, and ethical considerations of AI-driven mental health interventions [27]. This systematic review sought to critically synthesize the existing empirical evidence to clarify the benefits, limitations, and practical challenges of AI applications within this field. By doing so, it aimed to equip clinicians, researchers, and policymakers with the knowledge needed to make informed, evidence-based decisions that optimize therapeutic outcomes while safeguarding patient rights and well-being. Ultimately, this work highlights the importance of responsible innovation: balancing cutting-edge technological advances with compassionate, human-centered mental health care. Through a comprehensive analysis of recent empirical findings, this review evaluated the effectiveness of AI interventions in treating psychological disorders.

Methods

Study design

The present study is a systematic review conducted following the PRISMA [28] framework, aiming to investigate existing research on the effectiveness of AI interventions in the treatment of psychological disorders. The research was guided by a structured set of questions addressing key dimensions, such as purpose, target population, timing, and mechanisms of AI-based psychological interventions (Table 1).

Search strategy

A systematic search was conducted using specialized keywords, such as “artificial intelligence,” “machine learning,” “digital mental health,” “psychological disorders,” and “AI-based interventions” across several English-language scientific databases, including Google Scholar, PubMed, ProQuest, Embase, PsycINFO, and Scopus, covering the period from January 2017 to April 2025.
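The search strategy lists keywords and a date window but does not report how the terms were combined. As a minimal sketch, assuming a common approach in which synonyms are joined with OR inside concept groups and the groups are joined with AND, a query string and date filter might be built as follows. The split of the five keywords into a technology group and a clinical group, the function names, and the end date of 30 April 2025 are illustrative assumptions, not the authors' documented procedure; database-specific syntax (field tags, controlled vocabulary) is omitted.

```python
from datetime import date

# Keywords quoted in the review; their split into two concept groups is an assumption.
TECHNOLOGY_TERMS = ["artificial intelligence", "machine learning", "AI-based interventions"]
CLINICAL_TERMS = ["digital mental health", "psychological disorders"]

# Search window reported in the review (January 2017 to April 2025); the exact end day is assumed.
START = date(2017, 1, 1)
END = date(2025, 4, 30)


def build_query(concept_groups: list) -> str:
    """OR the quoted synonyms within each concept group, then AND the groups together."""
    blocks = ["(" + " OR ".join(f'"{term}"' for term in group) + ")" for group in concept_groups]
    return " AND ".join(blocks)


def in_window(published: date, start: date = START, end: date = END) -> bool:
    """Return True if a record's publication date falls inside the search window (inclusive)."""
    return start <= published <= end


if __name__ == "__main__":
    print(build_query([TECHNOLOGY_TERMS, CLINICAL_TERMS]))
    print(in_window(date(2016, 12, 31)), in_window(date(2021, 6, 1)))  # False True
```

Grouping synonyms before combining them keeps the query from matching every record that mentions any single term, which a flat OR across all five keywords would do.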

Eligibility criteria

Studies were included if they targeted patients with major depressive disorder and anxiety symptoms and assessed the success of AI-based solutions in the diagnosis, prevention, or treatment of psychological disorders. Only articles with quantitative, qualitative, semi-experimental, or experimental designs were included. Additionally, eligible works had to meet the predefined quality criteria and be available in full text in English. These criteria, structured according to the PICOS framework (Table 2), were intended to ensure that the analysis drew only on high-quality evidence focused specifically on the role of AI in mental health interventions.

Risk of bias assessment

The risk of bias in the included studies was assessed independently by different researchers using standardized checklists appropriate to each study design. For quantitative studies, the evaluation criteria were selection, performance, detection, attrition, and reporting bias. Qualitative studies were evaluated for credibility, transferability, dependability, and confirmability. Semi-experimental and experimental trials were rated on randomization, allocation concealment, blinding, completeness of outcome data, and selective reporting. Disagreements between reviewers were resolved through discussion and consensus. Studies judged to be at high risk of bias in critical domains were flagged, and their influence on the overall findings was taken into account to uphold transparency and methodological rigor.

Effect measures

The effects of AI-based interventions were evaluated using a combination of quantitative and qualitative metrics. For quantitative studies, the effect measures were accuracy, sensitivity, specificity, area under the curve (AUC), predictive values, mean differences, and effect sizes wherever available. For qualitative studies, thematic analysis, participants' views, and behavioral or cognitive changes were used. To ensure the consistency, comparability, and reproducibility of effect measures across studies, all measures were extracted onto a structured data extraction form. This approach provided an effective way to synthesize evidence on AI effectiveness and enabled useful cross-study comparisons despite methodological differences.
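The quantitative effect measures listed above have standard definitions. The following Python sketch shows how they are typically computed: diagnostic metrics from a 2×2 confusion matrix, AUC as the probability that a randomly chosen positive case is scored above a randomly chosen negative case, and Hedges' g as a bias-corrected standardized mean difference. It is a generic illustration, not the extraction form the reviewers used; the function names and the example counts are hypothetical.

```python
import math


def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Confusion-matrix metrics for a binary screening or diagnostic classifier."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),  # true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
    }


def auc(scores_pos: list, scores_neg: list) -> float:
    """AUC as the probability that a random positive case outscores a random negative one.

    Ties count as half a win; this pairwise form equals the Mann-Whitney U statistic
    divided by the number of positive-negative pairs.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c) -> float:
    """Standardized mean difference (intervention minus control) with small-sample correction."""
    pooled_sd = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2))
    cohens_d = (mean_t - mean_c) / pooled_sd
    correction = 1 - 3 / (4 * (n_t + n_c) - 9)  # common approximation of Hedges' J
    return cohens_d * correction
```

For example, a hypothetical screener with 40 true positives, 10 false positives, 45 true negatives, and 5 false negatives has an accuracy of 0.85, a PPV of 0.80, and an NPV of 0.90. For symptom scales where lower scores are better, a negative g indicates fewer symptoms in the intervention group.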

Quality assessment

All retrieved articles were evaluated using keyword searches focused on neuropsychological factors associated with brain abnormalities in patients with major depressive disorder accompanied by anxiety symptoms. To ensure thoroughness, the reference lists of eligible studies were also reviewed for additional relevant sources. Each of the 39 selected articles was independently analyzed by the researchers, who extracted data using a structured content analysis form. Any discrepancies were resolved through consensus, with strict adherence to the inclusion criteria to ensure that only studies addressing the effectiveness of AI interventions in treating psychological disorders were included.

The quality of each study was assessed using a standardized checklist covering various criteria, including the alignment of article structure with the type of study, clarity of research objectives, characteristics of the study population, sampling methods, data collection instruments, appropriate statistical analyses, defined inclusion and exclusion criteria, ethical considerations, accurate reporting of results in line with objectives, and discussion grounded in prior literature [29]. Based on established methodological standards, articles were rated on a binary scale (0 or 1) across relevant criteria for quantitative (6 criteria), qualitative (11 criteria), semi-experimental (8 criteria), and experimental (7 criteria) studies. Studies were excluded if they scored ≤4 in quantitative research, ≤6 in experimental or semi-experimental designs, or ≤8 in qualitative studies [30].
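The scoring rule in the preceding paragraph can be expressed as a small check. This sketch encodes the criterion counts and exclusion cut-offs exactly as reported; the function names are hypothetical. Read literally, the cut-offs imply minimum passing totals of 5 of 6 (quantitative), 9 of 11 (qualitative), 7 of 8 (semi-experimental), and 7 of 7 (experimental), so an experimental study would need a perfect score to be retained.

```python
# Number of binary (0/1) checklist criteria per study design, as reported in the review.
CRITERIA = {"quantitative": 6, "qualitative": 11, "semi-experimental": 8, "experimental": 7}

# Studies scoring at or below these totals were excluded, as reported in the review.
EXCLUDE_AT_OR_BELOW = {"quantitative": 4, "qualitative": 8, "semi-experimental": 6, "experimental": 6}


def passes_quality(design: str, item_scores: list) -> bool:
    """Return True if the summed 0/1 checklist score is above the design's exclusion cut-off."""
    expected = CRITERIA[design]
    if len(item_scores) != expected:
        raise ValueError(f"{design} studies are rated on {expected} criteria, got {len(item_scores)}")
    if any(score not in (0, 1) for score in item_scores):
        raise ValueError("each criterion must be scored 0 or 1")
    return sum(item_scores) > EXCLUDE_AT_OR_BELOW[design]


def minimum_passing_score(design: str) -> int:
    """Lowest total that survives the cut-off for a given design."""
    return EXCLUDE_AT_OR_BELOW[design] + 1


if __name__ == "__main__":
    for design, n_items in CRITERIA.items():
        print(f"{design}: at least {minimum_passing_score(design)} of {n_items}")
```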

Data collection and extraction

The abstracts of 862 published articles were initially screened, and duplicate entries were systematically removed through multiple stages. Following this rigorous selection and quality assessment process, a total of 39 articles were identified for comprehensive review and data extraction (Figure 1).

Results

The present study systematically reviewed all available English-language articles addressing the effectiveness of AI-based interventions in the treatment of psychological disorders. Table 3 presents a comprehensive summary of these 39 studies, including the authors, purpose, sample characteristics, country or scope, research methodology, and key findings.

Tables 3 and 4 collectively illustrate two complementary strands of research on the integration of AI in psychotherapy.

Table 3 encompasses empirical and data-driven studies that provide measurable evidence of AI’s efficacy in clinical and therapeutic contexts, employing RCTs, observational designs, and psychometric validations to evaluate performance indicators, such as accuracy, sensitivity, and treatment outcomes. In contrast, Table 4 compiles conceptual and review-based works that delineate the theoretical, ethical, and methodological foundations of AI in mental health, yet largely lack experimental validation. This juxtaposition highlights a clear research gap: while empirical studies demonstrate the practical feasibility of AI tools, they often omit deeper theoretical framing, whereas conceptual studies discuss ethical and systemic implications without empirical support. The present study positions itself at the intersection of these two domains, empirically addressing the conceptual and ethical challenges identified in prior reviews and thereby contributing novel, evidence-based insights into the application of AI within psychotherapeutic practice.

Study characteristics

This review encompassed 39 studies from a diverse range of countries, including Iran, the United States, Germany, Denmark, China, India, the United Kingdom, Poland, Australia, Italy, Turkey, Ukraine, and multiple studies with a global or multi-country scope. The study designs span RCTs, cross-sectional surveys, systematic and narrative reviews, conceptual analyses, and feasibility studies. Sample sizes vary widely, ranging from small clinical groups (e.g. 9 to 104 participants) to large populations exceeding thousands (e.g. 4,500 or more app users). The diversity of study designs and populations reflects the broad interest in exploring the integration of AI into mental health diagnostics, treatment, and psychotherapy support worldwide.

Key findings of AI interventions

Key findings consistently highlight the promising potential of AI and ML technologies in enhancing mental health care. AI-powered tools demonstrated improved diagnostic accuracy, facilitated the early detection of depressive and anxiety disorders, and enabled personalized treatment plans based on patient-specific data, including behavioral markers, genetic information, and clinical history. Several studies reported significant reductions in anxiety and depression symptoms with AI-supported interventions, including digital programs, chatbots, and mobile apps, which often offered scalability, accessibility, and cost-effectiveness compared to traditional therapy. Importantly, many studies emphasize the complementary role of AI alongside human clinicians, advocating for hybrid models that leverage AI’s strengths without undermining the essential human connection in psychotherapy.

Despite these advances, multiple studies caution against overreliance on AI, underscoring challenges such as ethical concerns, data privacy, potential algorithmic bias, and the need for rigorous validation and clinician training. Attitudes toward AI varied: some clinicians expressed apprehension about being replaced, while patients often showed greater willingness to engage with AI-based tools, particularly for measurement and monitoring tasks. The literature calls for enhanced education and transparent frameworks to facilitate ethical and effective AI integration, ensuring that technological advancements augment rather than replace human-centered care in mental health practice.

Challenges and future directions

AI has massive potential to transform the delivery of mental health care by enabling prompt diagnosis, individualized therapies, and larger-scale support. However, several critical challenges must be addressed before safe, effective, and equitable implementation can be realized. Grouping these challenges into distinct domains clarifies the existing limitations and the priorities for future research.

Ethics and privacy issues

The use of AI as a mental health tool raises highly contentious questions about patient privacy, data security, informed consent, and possible algorithmic bias [11, 15, 23]. Sensitive clinical and behavioral data should be governed transparently, as this is essential to safeguard users and maintain integrity. Standardized ethical guidelines and regulatory frameworks should be established for AI-based mental health tools. Interpretable and auditable AI models should be encouraged so that both clinicians and patients can understand and challenge decisions. Moreover, future research should be conducted in a culturally sensitive manner to reduce the risk of bias and ensure that interventions are fair to diverse groups of people.

Methodological heterogeneity and validation

The available literature varies widely in design, samples, AI algorithms, and outcome measures, which limits comparability and precludes meta-analytic synthesis [3, 12, 17, 32]. Small sample sizes, short follow-up periods, and a lack of uniformity in reporting undermine confidence in generalizability and clinical applicability. Comprehensive, multi-site, longitudinal studies using standardized protocols for data collection, evaluation, and reporting are needed. The focus must be on stringent validation of AI models, replication studies, and preregistration of research protocols to ensure methodological transparency, reproducibility, and reliable outcomes.
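Heterogeneity of this kind is usually quantified before deciding whether studies can be pooled. As an illustration only (the review reports no such calculation), the sketch below computes Cochran's Q under inverse-variance weighting and the derived statistic I^2 = max(0, (Q - df) / Q), expressed as a percentage, where df is the number of studies minus one. The function name and the input effect sizes and variances are hypothetical.

```python
def heterogeneity(effects: list, variances: list) -> tuple:
    """Return Cochran's Q and I^2 (as a percentage) for a set of study effect sizes."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching effects and variances")
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)  # fixed-effect estimate
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2 is truncated at zero when Q does not exceed its degrees of freedom.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i_squared


if __name__ == "__main__":
    q, i2 = heterogeneity([0.2, 0.5, 0.8], [0.01, 0.01, 0.01])
    print(round(q, 2), round(i2, 1))
```

For three hypothetical studies with effects of 0.2, 0.5, and 0.8 and equal variances of 0.01, Q is 18 on 2 degrees of freedom and I^2 is about 89%, a level conventionally read as high heterogeneity.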

Clinician education and development

Inadequate knowledge and awareness among clinical staff hinder the implementation of AI tools and their integration into established workflows, which can decrease usability and engagement [8, 34]. Without proper training, clinicians may underutilize or misinterpret AI suggestions, negatively affecting patient care. Systematic clinical training programs should be designed, covering AI literacy, interpretation of decision-support output, and integration into automated workflows. Additionally, hybrid care models that support rather than replace human skills should be explored, with their effectiveness examined in terms of clinician efficiency, patient outcomes, and therapeutic relationships.

Explainable AI in clinical care: Enhancing trust and performance

Black-box AI models that do not explain their outputs undermine clinician and patient confidence, ultimately diminishing clinical performance [20, 31]. Ethical deployment requires transparency and support for the therapeutic alliance. It is essential to develop explainable and interpretable AI systems that clearly communicate the basis for their predictions and recommendations. Future research must examine how model transparency affects clinicians’ reasoning, patient compliance, and overall involvement in the care process. User-centered design principles should be applied to make AI interventions acceptable and usable for diverse populations.
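One simple form of the explainability discussed here is a linear risk model whose prediction decomposes into per-feature contributions. The sketch below is a hypothetical logistic-regression screen: the feature names, weights, and intercept are invented for illustration and are not drawn from any reviewed study. More complex models typically require post-hoc explanation methods, which are not shown here.

```python
import math

# Hypothetical logistic-regression parameters for a depression-risk screen (illustrative only).
INTERCEPT = -3.0
WEIGHTS = {"phq9_score": 0.15, "sleep_hours": -0.20, "prior_episode": 1.10}


def predict_with_explanation(features: dict) -> tuple:
    """Return a risk probability plus each feature's additive contribution to the log-odds."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    # Rank features by the size of their contribution so the main drivers are shown first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


if __name__ == "__main__":
    prob, ranked = predict_with_explanation({"phq9_score": 16, "sleep_hours": 5, "prior_episode": 1})
    print(round(prob, 2))
    for name, value in ranked:
        print(f"{name}: {value:+.2f} log-odds")
```

For a hypothetical patient with a PHQ-9 score of 16, 5 hours of sleep, and a prior episode, the contributions are about +2.4, -1.0, and +1.1 on the log-odds scale, giving a risk of roughly 0.38, with the PHQ-9 score listed first as the largest driver.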

Justice, marginalization, and discrimination

Unequal access to digital health technologies may widen existing inequalities, particularly in underserved or under-resourced settings and among culturally diverse populations [21, 32]. Without deliberate design choices, AI interventions may favor privileged populations. Inclusive AI design that considers socio-economic, cultural, and geographic diversity should therefore be prioritized. It is also crucial to determine the long-term sustainability, scalability, and cost-effectiveness of these interventions to ensure widespread access. Further studies are required to examine ways to reduce digital literacy barriers and to incorporate feedback from underrepresented users, thereby enhancing both usage and performance.
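A basic way to check whether a tool performs worse for some groups is to compare detection rates across subgroups. This sketch computes sensitivity (true-positive rate) per group and the largest gap between groups, sometimes called an equal-opportunity gap. The function names, group labels, and records are hypothetical, and a full fairness audit would examine additional metrics and confidence intervals.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Sensitivity per subgroup.

    records: iterable of (group, y_true, y_pred) tuples, where 1 means the
    condition is present (y_true) or the tool flagged the case (y_pred).
    Groups with no true cases are omitted because sensitivity is undefined for them.
    """
    detected = defaultdict(int)
    positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                detected[group] += 1
    return {group: detected[group] / positives[group] for group in positives}


def max_gap(rates):
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical screening results: (group, condition present, tool flagged).
    records = (
        [("urban", 1, 1)] * 3 + [("urban", 1, 0)]
        + [("rural", 1, 1)] * 2 + [("rural", 1, 0)] * 2
        + [("rural", 0, 0)] * 5
    )
    rates = sensitivity_by_group(records)
    print(rates)  # {'urban': 0.75, 'rural': 0.5}
    print(max_gap(rates))  # 0.25
```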

Long-term outcomes and sustainability

Most publications focus on short-term results, so it is unclear whether the benefits of AI interventions are durable. Understanding long-term effects is essential to determine clinical utility and inform implementation plans [32, 33]. Longitudinal studies should assess the persistence of clinical benefits, engagement, and behavioral changes over time. It is also important to evaluate interventions under real-world conditions, including their scalability, their impact on healthcare systems, and potential opportunities for cost savings.

To provide context for the current study, a comparison with previous review papers is presented in Table 5.

This table summarizes key aspects of earlier studies, including the main objective of each review, the type of medical data used, the AI models applied, citation counts, and other relevant information.

Figure 2 displays the overall methodological composition of the reviewed studies. RCTs accounted for the largest proportion (n=8), demonstrating the growing empirical validation of AI-assisted psychotherapy tools. Five studies employed pilot, experimental, mixed-methods, or observational designs, reflecting exploratory and developmental efforts in this emerging domain. Another five studies relied primarily on survey or questionnaire-based data, including psychometric validations assessing user or clinician attitudes toward AI. Six papers were conceptual, theoretical, or literature-based, indicating strong theoretical grounding and ethical discourse surrounding AI in psychotherapy. Finally, one study utilized partial least squares structural equation modeling (PLS-SEM), representing an advanced statistical approach to evaluating the psychological and academic effects of generative AI.

Figure 3 illustrates the international distribution of research on AI applications in psychotherapy and mental health. Out of the 22 primary empirical studies analyzed, the United States accounted for the largest share (6 studies; 27.3%), followed by China (3 studies; 13.6%) and India (2 studies; 9.1%). Single studies were conducted in the United Kingdom, Ukraine, Argentina, Denmark, Italy, Poland, Australia, Iran, Turkey, and Germany (each 1 study; 4.5%). In addition, one study (4.5%) was classified as “Global/International,” encompassing data from multiple regions or cross-cultural contexts. This geographical distribution demonstrates growing global engagement with AI-assisted psychotherapy, though a notable concentration remains in Western and East Asian research contexts.
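The percentages reported for Figure 3 follow directly from the study counts. This short check, using only the counts given in the text, confirms that they sum to the 22 primary empirical studies and reproduces the rounded shares.

```python
# Study counts by country for the 22 primary empirical studies, as reported for Figure 3.
COUNTRY_COUNTS = {
    "United States": 6,
    "China": 3,
    "India": 2,
    "United Kingdom": 1, "Ukraine": 1, "Argentina": 1, "Denmark": 1, "Italy": 1,
    "Poland": 1, "Australia": 1, "Iran": 1, "Turkey": 1, "Germany": 1,
    "Global/International": 1,
}


def shares(counts: dict) -> dict:
    """Convert counts to percentages of the total, rounded to one decimal place."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


if __name__ == "__main__":
    print(sum(COUNTRY_COUNTS.values()))  # 22
    print(shares(COUNTRY_COUNTS)["United States"])  # 27.3
```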

Figure 4 summarizes the distribution of data sources and participant samples across the included studies. Chatbot- or AI-based therapy data constituted the majority (n=10; 45.5%), reflecting the growing prominence of conversational agents in clinical and subclinical mental health interventions. Survey-, perception-, or attitude-based data were used in five studies (22.7%), primarily to assess user and clinician acceptance, ethical concerns, and perceived utility of AI tools. One study (4.5%) employed multimodal video and physiological data to detect emotional states through advanced computational models, while one study (4.5%) utilized wearable biosensor data for predictive modeling in obsessive-compulsive disorder. Additionally, one study (4.5%) analyzed classroom and educational engagement data, demonstrating the versatility of AI applications beyond traditional psychotherapy contexts.

Figure 5 presents the distribution of AI models and computational approaches reported across the analyzed literature. NLP and chatbot-based systems were most prevalent (n=7), emphasizing their role in delivering automated therapeutic dialogue and emotional support. Four studies implemented general ML or ensemble predictive models, focusing on diagnostic prediction and personalized treatment response. One study used deep learning architectures (CNN, RNN, HAN) for emotion classification, and another applied generative AI (ChatGPT-4) to assess its influence on academic and psychological outcomes. A final study integrated hybrid AI and NLP models, reflecting the trend toward multimodal and adaptive AI frameworks in digital psychotherapy.

Discussion

Novelty and significance

This systematic review presents a synthesis of evidence based on 39 studies conducted in various countries across different study designs, including RCTs, observational studies, conceptual analyses, and narrative/systematic reviews. In contrast to other reviews that focused on a specific disorder, a particular AI tool, or a limited group of interventions, this research demonstrates a holistic, integrative view of AI applications in mental health, including diagnostics, monitoring, and intervention. Critically, it emphasizes AI as a supplement to human clinicians and presents hybrid care models as the most effective in terms of the quality of the therapeutic process and efficiency [11, 17, 32]. The broad coverage of this review allows for the identification of global trends, underlying factors, and gaps that have emerged, thereby providing valuable insights for clinicians, policymakers, and researchers planning to incorporate AI into mental health systems [21, 32]. This diversity enables the review to highlight the potential of AI in improving clinical decision-making, patient involvement, and system efficiency.

Advancements and research trends

According to the literature, interest in AI-based mental health interventions has grown rapidly since 2017. Earlier work focused mainly on exploratory, small-scale, proof-of-concept studies, whereas recent articles involve larger samples, multi-site designs, and more sophisticated ML and deep learning algorithms for predictive diagnostics and tailored treatment planning [11, 18, 32]. This trend signifies the maturation of AI methodologies, increasing methodological rigor, and growing confidence in their clinical applicability. The shift from conceptual studies to empirically validated interventions indicates a readiness to implement AI in mental health practice and marks a crucial transition toward scalable, evidence-based solutions [3, 12, 17].

AI models and mechanisms

A wide range of AI solutions has been applied in mental health. Chatbots using NLP are useful for psychoeducation, symptom monitoring, and therapeutic interaction [1, 14, 26]. ML methods such as support vector machines, random forests, and neural networks support predictive diagnosis and risk classification and can inform personalized treatment recommendations [13, 23, 29]. Reinforcement learning and hybrid AI-human components make adaptive, real-time interventions possible, maximizing engagement and clinical outcomes while preserving the therapeutic relationship [20, 28, 32]. Taken together, these studies indicate that AI is intended not to substitute for clinicians but to serve as a powerful complement to their practice, enhancing accessibility, accuracy, and customization.
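The adaptive mechanism attributed to reinforcement learning can be illustrated with its simplest special case, a multi-armed bandit. In this hypothetical sketch, an epsilon-greedy agent chooses among three intervention modules and learns from a binary engagement signal (for example, whether a user completed the suggested exercise). The class and function names, modules, engagement probabilities, and epsilon value are assumptions for illustration; none of the reviewed studies is claimed to use this exact algorithm.

```python
import random
from typing import Optional


class EpsilonGreedy:
    """Choose among intervention modules, usually exploiting the best-rated one so far."""

    def __init__(self, n_arms: int, epsilon: float = 0.1, seed: Optional[int] = None):
        self.epsilon = epsilon
        self.counts = [0] * n_arms       # times each module was offered
        self.values = [0.0] * n_arms     # running mean engagement per module
        self.rng = random.Random(seed)

    def select(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.counts))  # explore: random module
        # Exploit: module with the highest estimated engagement (ties go to the lowest index).
        return max(range(len(self.values)), key=self.values.__getitem__)

    def update(self, arm: int, reward: float) -> None:
        self.counts[arm] += 1
        # Incremental mean update, so past rewards need not be stored.
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


def simulate(engagement_probs: list, steps: int = 3000, seed: int = 0) -> EpsilonGreedy:
    """Run the agent against simulated users with fixed, hypothetical engagement probabilities."""
    env = random.Random(seed + 1)
    agent = EpsilonGreedy(len(engagement_probs), epsilon=0.1, seed=seed)
    for _ in range(steps):
        arm = agent.select()
        reward = 1.0 if env.random() < engagement_probs[arm] else 0.0
        agent.update(arm, reward)
    return agent


if __name__ == "__main__":
    agent = simulate([0.2, 0.5, 0.8])
    print(agent.counts)  # the third module should receive most offers
```

A fixed epsilon keeps exploring indefinitely; adaptive-intervention systems often decay exploration or condition on user context, which this sketch deliberately leaves out.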

Clinical impact and patient outcomes

AI interventions have consistently improved diagnostic accuracy and aided the timely identification of psychological conditions, especially depression and anxiety [11, 15, 17]. Treatment plans tailored to behavioral, genetic, and clinical data enhance treatment compliance, patient involvement, and symptom reduction [5, 7, 32]. Chatbots, mobile apps, and web-based therapies delivered through digital platforms are scalable, user-friendly, and affordable, especially in areas with a shortage of licensed mental health workers [6, 22]. Hybrid AI-human models maintain treatment efficacy while fostering the therapeutic alliance, a significant determinant of trust [32, 35]. Overall, these findings highlight the transformative capabilities of AI in mental health care, as it can enhance quality, ethics, and relationships simultaneously.

Ethical, practical, and implementation considerations

Despite such developments, there are major ethical, practical, and implementation issues. Key ethical concerns include the privacy of collected data, algorithmic bias, informed consent, and AI accountability in decision-making [11, 15, 23]. Clinicians’ fears, insufficient training, and integration into existing workflows hinder adoption [8, 34]. Strong methodological rigor is also necessary because of the construct limitations of algorithms and the lack of standard validation systems [20, 31]. These concerns need to be tackled to achieve a safe, equitable, and successful implementation of AI into practice, thereby enabling scientifically sound, ethically responsible, and meaningful interventions.

Conclusion

This systematic review synthesized the existing information regarding the implementation of AI in mental health care, including a wide variety of applications, including, but not limited to, AI-based chatbots, online platforms, predictive modelling, wearable devices, and virtual reality-based interventions. Across diverse populations, study designs, and clinical settings, AI interventions have shown significant potential in increasing the accuracy of diagnoses, tailoring patient therapy plans, improving patient interaction, and enhancing accessibility for individuals with mental health vulnerabilities. It is noteworthy that AI-assisted platforms could result in the early diagnosis and prediction of depressive, anxiety, and stress symptoms, while also supplementing conventional psychotherapy strategies.

Despite these advances, the review found substantial heterogeneity in study designs, sample sizes, populations, AI algorithms, and outcome measures, which hinders direct comparability and possible meta-analytic synthesis. Many studies were based on small or homogeneous cohorts with short-term follow-ups and varied reporting criteria, limiting the ability to draw conclusions about long-term effectiveness, scalability, and sustainability. The responsible implementation of AI in mental health care was challenged by the need to include ethical considerations, transparency, and interpretability, as well as to maintain methodological rigor.

In conclusion, while AI offers groundbreaking opportunities to improve patient-centered mental health care, its full potential cannot be realized without rigorous longitudinal and large-scale studies. Future research should include more diverse populations, address less common disorders, and evaluate hybrid AI-human interventions using standardized integration protocols. Cost-effectiveness, equity, and long-term outcomes also need to be evaluated. By promoting such integration, AI can be used safely and effectively in clinical practice, ultimately enhancing personalized, ethical, and accessible mental health care worldwide.

Limitations and future directions

The included studies demonstrated substantial opportunities to implement AI in mental health care through chatbots, online services, predictive analytics, and wearable sensors. Nevertheless, several limitations restrict the generalization and comparison of the findings. Heterogeneity in study designs, sample sizes, populations, AI models, and outcome measures precludes direct cross-study comparison and meta-analytic synthesis. Most studies relied on small, homogeneous, or short-term cohorts, so long-term efficacy, scalability, and sustainability have not been adequately investigated. Inconsistent reporting, weak methodological processes, and variable study quality further complicate interpretation and replication.

Future studies should focus on large-scale longitudinal evaluations of the sustainability of clinical outcomes, cost-effectiveness, and accessibility across diverse populations and underrepresented disorders. Prioritizing hybrid AI-human interventions with standardized integration protocols and interpretable models will help minimize bias, while ethically informed frameworks will be essential to ensure fair, safe, and effective implementation. Addressing these gaps will improve the reliability, generalizability, and clinical applicability of AI-based mental health interventions.

Ethical Considerations

Compliance with ethical guidelines

There were no ethical considerations to be addressed in this research.

Funding

This research did not receive any grant from funding agencies in the public, commercial, or non-profit sectors.

Authors' contributions

All authors contributed equally to preparing this article.

Conflict of interest

The authors declared no conflict of interest.

Acknowledgments

The authors would like to thank all individuals and institutions that contributed to the completion of this study. Special appreciation is extended to the researchers whose work was reviewed in this systematic analysis.

References

- Spytska L. The use of artificial intelligence in psychotherapy: Development of intelligent therapeutic systems. BMC Psychology. 2025; 13(1):175. [DOI:10.1186/s40359-025-02491-9] [PMID]

- Sadeh-Sharvit S, Camp TD, Horton SE, Hefner JD, Berry JM, Grossman E, et al. Effects of an artificial intelligence platform for behavioral interventions on depression and anxiety symptoms: Randomized clinical trial. Journal of Medical Internet Research. 2023; 25:e46781. [DOI:10.2196/46781] [PMID]

- Zhou S, Zhao J, Zhang L. Application of artificial intelligence on psychological interventions and diagnosis: An overview. Frontiers in Psychiatry. 2022; 13:811665. [DOI:10.3389/fpsyt.2022.811665] [PMID]

- Yeasmin S, Das S, Bhuiyan MF, Suha SH, Prabha M, Vanu N, et al. Artificial intelligence in mental health: Leveraging machine learning for diagnosis, therapy, and emotional well-being. Journal of Ecohumanism. 2025; 4(3):286-300. [DOI:10.62754/joe.v4i3.6640]

- Varghese MA, Sharma P, Patwardhan M. Public perception on artificial intelligence-driven mental health interventions: Survey research. JMIR Formative Research. 2024; 8:e64380. [DOI:10.2196/64380] [PMID]

- Torous J, Linardon J, Goldberg SB, Sun S, Bell I, Nicholas J, et al. The evolving field of digital mental health: Current evidence and implementation issues for smartphone apps, generative artificial intelligence, and virtual reality. World Psychiatry. 2025; 24(2):156-74. [DOI:10.1002/wps.21299] [PMID]

- Palmer CE, Marshall E, Millgate E, Warren G, Ewbank M, Cooper E, et al. Combining artificial intelligence and human support in mental health: Digital intervention with comparable effectiveness to human-delivered care. Journal of Medical Internet Research. 2025; 27:e69351. [DOI:10.2196/69351] [PMID]

- Omiyefa S. Artificial intelligence and machine learning in precision mental health diagnostics and predictive treatment models. International Journal of Research Publication and Reviews. 2025; 6(3):85-99. [DOI:10.55248/gengpi.6.0325.1107]

- Olawade DB, Wada OZ, Odetayo A, David-Olawade AC, Asaolu F, Eberhardt J. Enhancing mental health with artificial intelligence: Current trends and future prospects. Journal of Medicine, Surgery, and Public Health. 2024; 100099. [DOI:10.1016/j.glmedi.2024.100099]

- Nelson J, Kaplan J, Simerly G, Nutter N, Edson-Heussi A, Woodham B, et al. The balance and integration of artificial intelligence within cognitive behavioral therapy interventions. Current Psychology. 2025; 1-1. [DOI:10.1007/s12144-025-07320-1]

- Mehta A, Niles AN, Vargas JH, Marafon T, Couto DD, Gross JJ. Acceptability and effectiveness of artificial intelligence therapy for anxiety and depression (Youper): Longitudinal observational study. Journal of Medical Internet Research. 2021; 23(6):e26771. [DOI:10.2196/26771] [PMID]

- Lønfeldt NN, Clemmensen LK, Pagsberg AK. A wearable artificial intelligence feedback tool (wrist angel) for treatment and research of obsessive compulsive disorder: Protocol for a nonrandomized pilot study. JMIR Research Protocols. 2023; 12(1):e45123. [DOI:10.2196/45123] [PMID]

- Liu F, Ju Q, Zheng Q, Peng Y. Artificial intelligence in mental health: Innovations brought by artificial intelligence techniques in stress detection and interventions of building resilience. Current Opinion in Behavioral Sciences. 2024; 60:101452. [DOI:10.1016/j.cobeha.2024.101452]

- Fulmer R, Joerin A, Gentile B, Lakerink L, Rauws M. Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: Randomized controlled trial. JMIR Mental Health. 2018; 5(4):e9782. [DOI:10.2196/mental.9782] [PMID]

- Fiske A, Henningsen P, Buyx A. Your robot therapist will see you now: Ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. Journal of Medical Internet Research. 2019; 21(5):e13216. [DOI:10.2196/13216] [PMID]

- Ferreri F, Bourla A, Peretti CS, Segawa T, Jaafari N, Mouchabac S. How new technologies can improve prediction, assessment, and intervention in obsessive-compulsive disorder (e-OCD). JMIR Mental Health. 2019; 6(12):e11643. [DOI:10.2196/11643] [PMID]

- Eid MM, Yundong W, Mensah GB, Pudasaini P. Treating psychological depression utilising artificial intelligence: AI for precision medicine-focus on procedures. Mesopotamian Journal of Artificial Intelligence in Healthcare. 2023; 2023:76-81. [DOI:10.58496/MJAIH/2023/015]

- Shahzad MF, Xu S, Liu H, Zahid H. Generative artificial intelligence (ChatGPT‐4) and social media impact on academic performance and psychological well‐being in China’s higher education. European Journal of Education. 2025; 60(1):e12835. [DOI:10.1111/ejed.12835]

- Danieli M, Ciulli T, Mousavi SM, Silvestri G, Barbato S, Di Natale L, et al. Assessing the impact of conversational artificial intelligence in the treatment of stress and anxiety in aging adults: Randomized controlled trial. JMIR Mental Health. 2022; 9(9):e38067. [DOI:10.2196/38067] [PMID]

- D’Alfonso S, Santesteban-Echarri O, Rice S, Wadley G, Lederman R, Miles C, et al. Artificial intelligence-assisted online social therapy for youth mental health. Frontiers in Psychology. 2017; 8:796. [DOI:10.3389/fpsyg.2017.00796] [PMID]

- Bhatt S. Digital mental health: Role of artificial intelligence in psychotherapy. Annals of Neurosciences. 2025; 32(2):117-27. [PMID]

- Bilge Y, Emiral E, Delal İ. Attitudes towards artificial intelligence (AI) in psychotherapy: Artificial Intelligence in Psychotherapy Scale (AIPS). Clinical Psychologist. 2025; 1-1. [DOI:10.1080/13284207.2025.2515074]

- Liu F, Wang P, Hu J, Shen S, Wang H, Shi C, et al. A psychologically interpretable artificial intelligence framework for the screening of loneliness, depression, and anxiety. Applied Psychology: Health and Well‐Being. 2025; 17(1):e12639. [DOI:10.1111/aphw.12639] [PMID]

- Shafiee Rad H. Reinforcing L2 reading comprehension through artificial intelligence intervention: Refining engagement to foster self-regulated learning. Smart Learning Environments. 2025; 12(1):23. [DOI:10.1186/s40561-025-00377-2]

- Klos MC, Escoredo M, Joerin A, Lemos VN, Rauws M, Bunge EL. Artificial intelligence-based chatbot for anxiety and depression in university students: Pilot randomized controlled trial. JMIR Formative Research. 2021; 5(8):e20678. [DOI:10.2196/20678] [PMID]

- Boucher EM, Harake NR, Ward HE, Stoeckl SE, Vargas J, Minkel J, et al. Artificially intelligent chatbots in digital mental health interventions: A review. Expert Review of Medical Devices. 2021; 18(1):37-49. [DOI:10.1080/17434440.2021.2013200] [PMID]

- Jacobson NC, Nemesure MD. Using artificial intelligence to predict change in depression and anxiety symptoms in a digital intervention: Evidence from a transdiagnostic randomized controlled trial. Psychiatry Research. 2021; 295:113618. [DOI:10.1016/j.psychres.2020.113618] [PMID]

- Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ. 2021; 372:n71. [DOI:10.1136/bmj.n71] [PMID]

- Bagheri Sheykhangafshe F, Hajialiani V, Hasani J. The role of resilience and emotion regulation in psychological distress of hospital staff during the COVID-19 pandemic: A systematic review study. Journal of Research and Health. 2021; 11(6):365-74. [DOI:10.32598/JRH.11.6.1922.1]

- Sarkis-Onofre R, Catalá-López F, Aromataris E, Lockwood C. How to properly use the PRISMA Statement. Systematic Reviews. 2021; 10(1):117. [DOI:10.1186/s13643-021-01671-z] [PMID]

- Liu H, Peng H, Song X, Xu C, Zhang M. Using AI chatbots to provide self-help depression interventions for university students: A randomized trial of effectiveness. Internet Interventions. 2022; 27:100495. [DOI:10.1016/j.invent.2022.100495] [PMID]

- Karkosz S, Szymański R, Sanna K, Michałowski J. Effectiveness of a web-based and mobile therapy chatbot on anxiety and depressive symptoms in subclinical young adults: Randomized controlled trial. JMIR Formative Research. 2024; 8(1):e47960. [DOI:10.2196/47960] [PMID]

- Patel F, Thakore R, Nandwani I, Bharti SK. Combating depression in students using an intelligent chatbot: A cognitive behavioral therapy. Paper presented at: 2019 IEEE 16th India Council International Conference (INDICON); 12 March 2020; Rajkot, India. [DOI:10.1109/INDICON47234.2019.9030346]

- Wagner J, Schwind AS. Investigating psychotherapists’ attitudes towards artificial intelligence in psychotherapy. BMC Psychology. 2025; 13(1):719. [DOI:10.1186/s40359-025-03071-7] [PMID]

- Aafjes‐van Doorn K. Feasibility of artificial intelligence‐based measurement in psychotherapy practice: Patients’ and clinicians’ perspectives. Counselling and Psychotherapy Research. 2025; 25(1):e12800. [DOI:10.1002/capr.12800]

- Das KP, Gavade P. A review of the efficacy of artificial intelligence for managing anxiety disorders. Frontiers in Artificial Intelligence. 2024; 7:1435895. [DOI:10.3389/frai.2024.1435895] [PMID]

- Ricci F, Giallanella D, Gaggiano C, Torales J, Castaldelli-Maia JM, Liebrenz M, et al. Artificial intelligence in the detection and treatment of depressive disorders: A narrative review of literature. International Review of Psychiatry. 2025; 37(1):39-51. [DOI:10.1080/09540261.2024.2384727] [PMID]

- Levkovich I. Is artificial intelligence the next co-pilot for primary care in diagnosing and recommending treatments for depression? Medical Sciences. 2025; 13(1):8. [DOI:10.3390/medsci13010008] [PMID]

- Ebert DD, Harrer M, Apolinário-Hagen J, Baumeister H. Digital interventions for mental disorders: Key features, efficacy, and potential for artificial intelligence applications. In: Frontiers in Psychiatry: Artificial Intelligence, Precision Medicine, and Other Paradigm Shifts. Singapore: Springer; 2019. p. 583-627. [DOI:10.1007/978-981-32-9721-0_29] [PMID]

- Cruz-Gonzalez P, He AW, Lam EP, Ng IM, Li MW, Hou R, et al. Artificial intelligence in mental health care: A systematic review of diagnosis, monitoring, and intervention applications. Psychological Medicine. 2025; 55:e18. [DOI:10.1017/S0033291724003295] [PMID]

- Beg MJ, Verma M, Verma MK. Artificial intelligence for psychotherapy: A review of the current state and future directions. Indian Journal of Psychological Medicine. 2024; 02537176241260819. [DOI:10.1177/02537176241260819] [PMID]

- Gual-Montolio P, Jaén I, Martínez-Borba V, Castilla D, Suso-Ribera C. Using artificial intelligence to enhance ongoing psychological interventions for emotional problems in real or close to real-time: A systematic review. International Journal of Environmental Research and Public Health. 2022; 19(13):7737. [DOI:10.3390/ijerph19137737] [PMID]

- Tornero-Costa R, Martinez-Millana A, Azzopardi-Muscat N, Lazeri L, Traver V, Novillo-Ortiz D. Methodological and quality flaws in the use of artificial intelligence in mental health research: Systematic review. JMIR Mental Health. 2023; 10(1):e42045. [DOI:10.2196/42045] [PMID]

- Cornelis FH, Filippiadis DK, Wiggermann P, Solomon SB, Madoff DC, Milot L, et al. Evaluation of navigation and robotic systems for percutaneous image-guided interventions: A novel metric for advanced imaging and artificial intelligence integration. Diagnostic and Interventional Imaging. 2025. [DOI:10.1016/j.diii.2025.01.004] [PMID]

- Lau Y, Ang WH, Ang WW, Pang PC, Wong SH, Chan KS. Artificial intelligence-based psychotherapeutic intervention on psychological outcomes: A meta‐analysis and meta‐regression. Depression and Anxiety. 2025; 2025(1):8930012. [DOI:10.1155/da/8930012]

Type of Study: Review Article

Subject: Artificial Intelligence

Received: 2025/07/20 | Accepted: 2025/11/4 | Published: 2025/12/31